NVIDIA Data Flywheel Blueprint

Deploying AI agents at scale introduces significant challenges, including high compute costs, latency bottlenecks, and model drift—especially in performance-critical environments. Balancing model accuracy with efficiency often requires complex workflows and ongoing manual intervention.

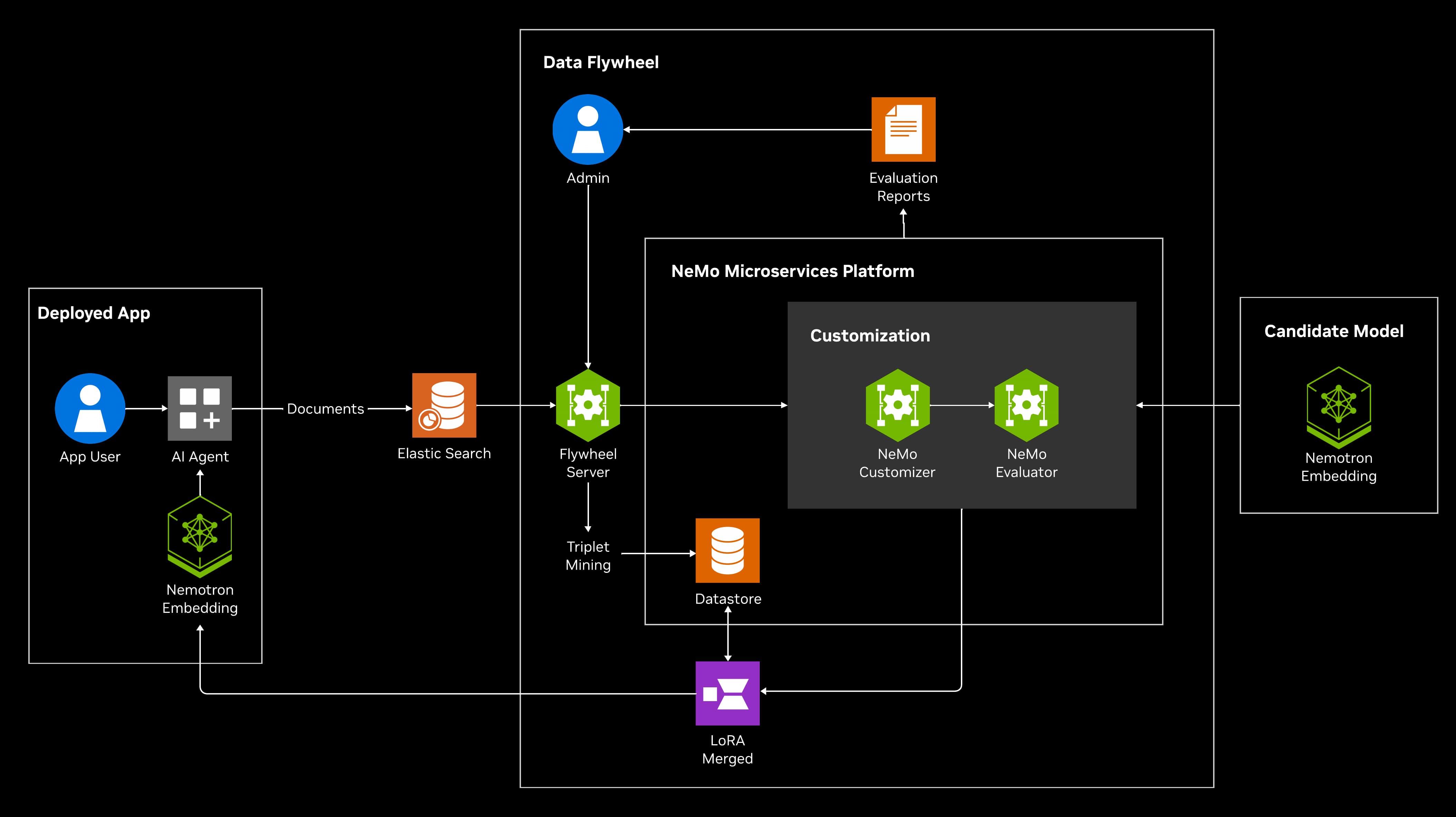

The NVIDIA Data Flywheel Blueprint addresses this by creating a self-reinforcing, automated loop that continuously improves models using real-world feedback. It collects production traffic logs, evaluates performance, fine-tunes models, and redeploys optimized versions, maintaining accuracy while reducing resource demands. Built with NVIDIA NeMo and open NVIDIA Nemotron models that are optimized and packaged as NVIDIA NIM, this blueprint transforms AI workflows into adaptive systems that learn from experience.
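As a rough illustration of the loop described above, the following Python sketch runs one flywheel pass over in-memory data. Every name in it (`Candidate`, `run_cycle`, and the injected `evaluate` and `fine_tune` callables) is a hypothetical stand-in rather than part of the blueprint's API; in the actual system those steps are carried out by the NeMo microservices.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record for a model under test (not a blueprint type)."""
    name: str
    score: float = 0.0


def run_cycle(
    train_logs: Sequence[dict],
    eval_logs: Sequence[dict],
    deployed: Candidate,
    candidates: Sequence[Candidate],
    evaluate: Callable[[str, Sequence[dict]], float],
    fine_tune: Callable[[str, Sequence[dict]], str],
) -> Candidate:
    """Run one evaluate -> fine-tune -> re-evaluate -> pick pass."""
    if not train_logs or not eval_logs:
        return deployed  # no new traffic, keep what is running
    # Score the deployed model on held-out traffic to set the bar.
    best = Candidate(deployed.name, evaluate(deployed.name, eval_logs))
    for cand in candidates:
        # Fine-tune on the training split, then score on the held-out split.
        tuned_name = fine_tune(cand.name, train_logs)
        tuned = Candidate(tuned_name, evaluate(tuned_name, eval_logs))
        # Replace the current best only on a strict improvement.
        if tuned.score > best.score:
            best = tuned
    return best
```

This sketch picks purely on evaluator score. A real deployment would also weigh model size, latency, and cost before redeploying.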

Use Cases

1. LLM Optimization for Cost and Latency

Fine-tune smaller candidate models to match the performance of larger ones while reducing inference latency and total cost of ownership (TCO).

- Techniques: Model distillation, DPO-based preference optimization, tool-calling accuracy improvements.

- Outcome: Faster, leaner models that meet enterprise-grade accuracy targets and optimize for latency and TCO.

- Get started with this launchable

Architecture Diagram

2. Embedding Accuracy Optimization

Improve the baseline accuracy of embedding models powering semantic search, RAG, and similarity-based applications.

- Techniques: Parameter-efficient fine-tuning (PEFT) and supervised fine-tuning (SFT) using NeMo Customizer.

- Outcome: Up to 17–20% accuracy improvement in internal tests, ensuring embeddings remain relevant as data evolves.

- Get started with this launchable

Architecture Diagram

Key Benefits

- Reduce Latency and Cost: Identifies smaller models that are empirically equivalent, enabling deployment of more efficient NIM microservices while maintaining performance.

- Accuracy Gains: Improve embedding model precision for domain-specific tasks.

- Continuous Improvement Loop: Enables ongoing evaluation without retraining or relabeling—true "flywheel" behavior that runs indefinitely as new traffic flows in.

- Data-Driven Decisions: Provides real comparisons across models using real traffic, backed by evaluator scores.

- Standardized Optimization: Any application can opt into the flywheel with minimal effort, making it a foundational component for a wide variety of use cases.

- Flexible Integration: Deploy in cloud, data center, or edge environments using Helm charts and REST APIs for seamless integration. Developers can choose any supported model—including the NVIDIA Nemotron family of open models—to build workflows for frontier AI development. Nemotron models offer one of the most open ecosystems in AI, with permissive licenses, transparent data practices, and detailed technical documentation.
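One way to read "empirically equivalent" in the first benefit above is: the smallest candidate whose evaluator score stays within a chosen tolerance of the reference model. The sketch below assumes that interpretation. The `ModelResult` fields, the default `tolerance`, and the function name are illustrative choices, not values or APIs taken from the blueprint.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelResult:
    """Hypothetical evaluation record for one model."""
    name: str
    params_b: float  # parameter count, in billions
    score: float     # evaluator score in [0, 1]


def smallest_equivalent(
    reference: ModelResult,
    candidates: Sequence[ModelResult],
    tolerance: float = 0.02,  # assumed acceptable score drop
) -> Optional[ModelResult]:
    """Return the smallest candidate scoring within `tolerance` of the reference."""
    eligible = [c for c in candidates if c.score >= reference.score - tolerance]
    # `default=None` covers the case where no candidate is close enough.
    return min(eligible, key=lambda c: c.params_b, default=None)
```

Returning `None` when nothing qualifies keeps the reference model in place, which is the safe default when no smaller model clears the bar.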

Key Features

- Production Data Pipeline: Collects real-world data from AI agent interactions and curates datasets from configurable log stores for evaluation, in-context learning, and fine-tuning.

- Automated Model Experimentation: Leverages a deployment manager to dynamically spin up candidate NIMs from a model registry—including smaller or fine-tuned variants—and run experiments such as in-context learning and LoRA-based fine-tuning.

- Semi-autonomous Operation: Operates without requiring any labeled data or human-in-the-loop curation.

- Evaluation with NVIDIA NeMo Evaluator: Evaluates models using custom metrics and task-specific benchmarks (e.g., tool-calling accuracy), leveraging large language models (LLMs) as automated judges to reduce the need for human intervention.

- LoRA-SFT Fine-Tuning with NVIDIA NeMo Customizer: Runs parameter-efficient fine-tuning on models using real-world data.

- REST API Service: Provides intuitive REST APIs for seamless integration into existing systems and workflows, running continuously as a FastAPI-based service that orchestrates underlying NeMo Microservices.

- Easy Deployment with Helm Chart: Simplifies deployment and management of the Data Flywheel Blueprint on Kubernetes with a unified, configurable Helm chart.
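The tool-calling accuracy benchmark mentioned under NeMo Evaluator above can be approximated by checking whether a candidate model selects the same function and arguments as the reference response in the logs. The following is a simplified sketch of such a metric, not NeMo Evaluator's implementation. The record layout (`name`, `arguments`) is an assumption here, loosely modeled on the common chat-completion tool-call shape.

```python
import json
from typing import Mapping, Sequence


def _normalize(call: Mapping) -> tuple:
    """Reduce a tool call to a comparable (name, canonical-arguments) pair."""
    args = call.get("arguments", {})
    if isinstance(args, str):
        # Arguments are often logged as a JSON string; parse before comparing.
        args = json.loads(args) if args else {}
    return call.get("name"), json.dumps(args, sort_keys=True)


def tool_call_accuracy(
    expected: Sequence[Mapping], predicted: Sequence[Mapping]
) -> float:
    """Fraction of predictions whose function name and arguments match exactly."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    if not expected:
        return 0.0
    hits = sum(_normalize(e) == _normalize(p) for e, p in zip(expected, predicted))
    return hits / len(expected)
```

Serializing arguments with sorted keys makes the comparison insensitive to key order, so `{"a": 1, "b": 2}` and `{"b": 2, "a": 1}` count as the same call.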

Minimum System Requirements

Hardware Requirements

- With Self-hosted LLM Judge: 6× NVIDIA H100, B200, or A100 GPUs

- With Remote LLM Judge: 2× NVIDIA H100, B200, or A100 GPUs

- Minimum Memory: 1 GB (512 MB reserved for Elasticsearch)

- Storage: Varies based on log volume and model size

- Network: Ports 8000 (API), 9200 (Elasticsearch), 27017 (MongoDB), 6379 (Redis)
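Before or after bringing the stack up, it can help to confirm that the ports listed above are reachable. The following is a minimal preflight sketch using only the Python standard library. The port numbers come from the list above; the helper names and the default `localhost` target are assumptions for illustration.

```python
import socket
from typing import Dict, Mapping

# Port numbers as listed in the hardware requirements above.
REQUIRED_PORTS: Dict[str, int] = {
    "API": 8000,
    "Elasticsearch": 9200,
    "MongoDB": 27017,
    "Redis": 6379,
}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False


def check_ports(
    host: str = "localhost", ports: Mapping[str, int] = REQUIRED_PORTS
) -> Dict[str, bool]:
    """Map each service name to whether its port accepts connections."""
    return {name: port_open(host, port) for name, port in ports.items()}
```

Calling `check_ports()` against a running deployment returns one boolean per service. A `False` entry means the service is down, not yet started, or blocked by a firewall.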

OS Requirements

Software Dependencies

- Elasticsearch 8.19.7

- MongoDB 7.0.25

- Redis 7.4.7-alpine

- FastAPI (API server)

- Celery (task processing)

- Python 3.12

- MLflow 3.6.0

- One of the following orchestration tools:

  - Docker Engine & Docker Compose (for local development and simple, single-host environments)

  - Kubernetes (>=1.25) and Helm (>=3.8) (for production and multi-node clusters)
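The Kubernetes and Helm minimums above can be checked programmatically. The sketch below assumes you already have the version strings in hand (for example, copied from each tool's version output) and compares them numerically. The function names and the `MINIMUMS` table are illustrative, with the thresholds taken from the list above.

```python
import re
from typing import Dict, Tuple

Version = Tuple[int, int, int]

# Minimum versions from the dependency list above.
MINIMUMS: Dict[str, Version] = {
    "kubernetes": (1, 25, 0),
    "helm": (3, 8, 0),
}


def parse_version(text: str) -> Version:
    """Extract the first major.minor[.patch] number from a version string."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    major, minor, patch = (int(part or 0) for part in match.groups())
    return major, minor, patch


def meets_minimum(tool: str, reported: str) -> bool:
    """Return True if the reported version satisfies the tool's minimum."""
    return parse_version(reported) >= MINIMUMS[tool]
```

Comparing integer tuples avoids the string-comparison trap where `"1.9"` sorts after `"1.25"`.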

Software Used in This Blueprint

NVIDIA Technology

- Nemotron models packaged as NIM microservices:

- NeMo:

  - NeMo Customizer: Model fine-tuning

  - NeMo Evaluator: Model and workflow evaluation

  - Datastore: Stores datasets, evaluation results, fine-tuning artifacts

  - Deployment Manager: Deploys and experiments with candidate models

  - Entity Store: Unified data model and registry

  - NIM Proxy: Routes inference across multiple models

3rd Party Software

- Elasticsearch

- MongoDB

- Redis

- FastAPI

- Celery

- MLflow

Ethical Considerations

NVIDIA believes Trustworthy AI is a shared responsibility, and we have established policies and practices to enable development for a wide array of AI applications. When downloaded or used in accordance with our terms of service, developers should work with their supporting model team to ensure the models meet requirements for the relevant industry and use case and address unforeseen product misuse. Please report security vulnerabilities or NVIDIA AI concerns here.

License

Use of the models in this blueprint is governed by the NVIDIA AI Foundation Models Community License.

Terms of Use

The microservices and NIMs are governed by the NVIDIA Software License Agreement and Product-Specific Terms for NVIDIA AI Products. Use of the models is governed by the NVIDIA Community Model License, except for Llama-3.2-NV-Embedqa-1b-v2 and Llama-3.1-Nemotron-Nano-8B-v1, use of which is governed by the NVIDIA Open Model License. The NVIDIA dataset is licensed under the Creative Commons Attribution 4.0 International License (CC BY 4.0). The other blueprint software and materials, including the orchestrator, Jupyter notebook, docker installer, container and documentation, are governed by the Apache License 2.0.

Additional Information

The following Llama license agreements also apply:

- Llama 3.1 Community License Agreement: Llama-3.1-8B-Instruct, Llama-3.1-70B-Instruct, and Llama-3.1-Nemotron-Nano-8B-v1.

- Llama 3.2 Community License Agreement: Llama-3.2-3B-Instruct, Llama-3.2-1B-Instruct, and Llama-3.2-NV-Embedqa-1b-v2.

- Llama 3.3 Community License Agreement: Llama-3.3-70B-Instruct.

Built with Llama.