State-of-the-art 685B reasoning LLM with sparse attention, long context, and integrated agentic tools.

A state-of-the-art general-purpose MoE VLM ideal for chat, agentic, and instruction-based use cases.

A general-purpose VLM ideal for chat and instruction-based use cases

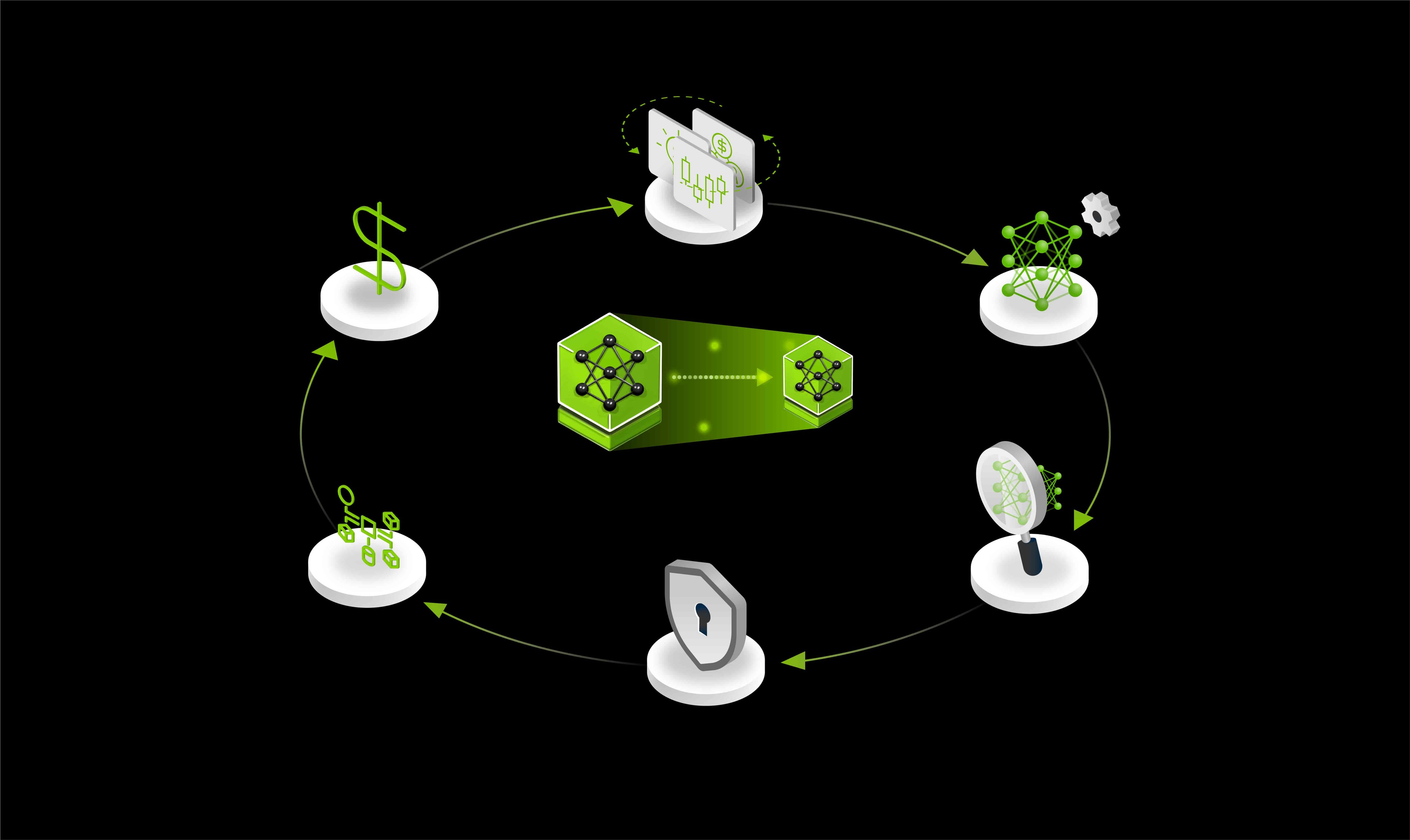

Distill and deploy domain-specific AI models from unstructured financial data to generate market signals efficiently—scaling your workflow with the NVIDIA Data Flywheel Blueprint for high-performance, cost-efficient experimentation.

Open Mixture of Experts LLM (230B, 10B active) for reasoning, coding, and tool-use/agent workflows

Build advanced AI agents for providers and patients using this developer example powered by NeMo Microservices, NVIDIA Nemotron, Riva ASR and TTS, and NVIDIA LLM NIM

Leading multilingual content safety model for enhancing the safety and moderation capabilities of LLMs

Securely extract, embed, and index multimodal data with encryption in-use for fast, accurate semantic search.

DeepSeek-V3.1: hybrid inference LLM with Think/Non-Think modes, stronger agents, 128K context, strict function calling.

ByteDance open-source LLM with long-context, reasoning, and agentic intelligence.

High‑efficiency LLM with hybrid Transformer‑Mamba design, excelling in reasoning and agentic tasks.

FLUX.1 Kontext is a multimodal model that enables in-context image generation and editing.

Smaller Mixture of Experts (MoE) text-only LLM for efficient AI reasoning and math

Mixture of Experts (MoE) reasoning LLM (text-only) designed to fit within an 80GB GPU.

Multilingual 7B LLM, instruction-tuned on all 24 EU languages for stable, culturally aligned output.

Multi-modal model to classify safety for input prompts as well as output responses.

Multimodal question-answer retrieval representing user queries as text and documents as images.

Use the multi-LLM compatible NIM container to deploy a broad range of LLMs from Hugging Face.

Build advanced AI agents within the biomedical domain using the AI-Q Blueprint and the BioNeMo Virtual Screening Blueprint

Improve safety, security, and privacy of AI systems at build, deploy and run stages.

Automate and optimize the configuration of radio access network (RAN) parameters using agentic AI and a large language model (LLM)-driven framework.

SOTA LLM pre-trained for instruction following and proficiency in the Indonesian language and its dialects.

Efficient multimodal model excelling at multilingual tasks, image understanding, and fast responses

Powerful, multimodal language model designed for enterprise applications, including software development, data analysis, and reasoning.

A general-purpose multimodal, multilingual MoE model with 128 experts and 17B parameters.

A multimodal, multilingual MoE model with 16 experts and 17B parameters.

Build a custom enterprise research assistant powered by state-of-the-art models that process and synthesize multimodal data, enabling reasoning, planning, and refinement to generate comprehensive reports.

End-to-end autonomous driving stack integrating perception, prediction, and planning with sparse scene representations for efficiency and safety.

Cutting-edge open multimodal model excelling in high-quality reasoning from images.

A lightweight, multilingual, advanced SLM text model for edge computing and resource-constrained applications

Route LLM requests to the best model for the task at hand.

Lightweight multilingual LLM powering AI applications in latency-bound, memory- and compute-constrained environments

Cutting-edge open multimodal model excelling in high-quality reasoning from image and audio inputs.

State-of-the-art, high-efficiency LLM excelling in reasoning, math, and coding.

Power fast, accurate semantic search across multimodal enterprise data with NVIDIA’s RAG Blueprint—built on NeMo Retriever and Nemotron models—to connect your agents to trusted, authoritative sources of knowledge.

Topic control model to keep conversations focused on approved topics, avoiding inappropriate content.

Industry-leading jailbreak classification model for protection from adversarial attempts

Leading content safety model for enhancing the safety and moderation capabilities of LLMs

Multilingual LLM trained on NVIDIA DGX Cloud, designed for mission-critical use cases in regulated industries including financial services, government, and heavy industry

Instruction-tuned LLM achieving SoTA performance on reasoning, math, and general knowledge

Multilingual LLM with emphasis on European languages, supporting regulated use cases including financial services, government, and heavy industry

Chinese and English LLM targeting language, coding, mathematics, reasoning, etc.

Multi-modal vision-language model that understands text, images, and video and creates informative responses

Advanced LLM for code generation, reasoning, and fixing across popular programming languages.

Advanced LLM for reasoning, math, general knowledge, and function calling

Advanced AI model that detects faces and identifies deepfake images.

Create intelligent virtual assistants for customer service across every industry

A bilingual Hindi-English SLM for on-device inference, tailored specifically for the Hindi language.

Chinese and English LLM targeting language, coding, mathematics, reasoning, etc.

Robust image classification model for detecting and managing AI-generated content.

Sovereign AI model trained on Japanese language that understands regional nuances.

Cutting-edge open multimodal model excelling in high-quality reasoning from images.

Cutting-edge MoE-based LLM designed to excel in a wide array of generative AI tasks.

Optimized SLM for on-device inference and fine-tuned for roleplay, RAG and function calling

Lightweight multilingual LLM powering AI applications in latency-bound, memory- and compute-constrained environments

Advanced state-of-the-art LLM with language understanding, superior reasoning, and text generation.

Advanced state-of-the-art LLM with language understanding, superior reasoning, and text generation.

Guardrail model to ensure that responses from LLMs are appropriate and safe

Advanced LLM for synthetic data generation, distillation, and inference for chatbots, coding, and domain-specific tasks.

Advanced LLM to generate high-quality, context-aware responses for chatbots and search engines.

This LLM follows instructions, completes requests, and generates creative text.

LLM for improved language comprehension and chatbot-oriented capabilities in Traditional Chinese.

Visual Changenet detects pixel-level change maps between two images and outputs a semantic change segmentation mask

EfficientDet-based object detection network to detect 100 specific retail objects from an input video.

LLM to represent and serve the linguistic and cultural diversity of Southeast Asia

Lightweight, state-of-the-art open LLM with strong math and logical reasoning skills.

Lightweight, state-of-the-art open LLM with strong math and logical reasoning skills.

An MoE LLM that follows instructions, completes requests, and generates creative text.

Advanced state-of-the-art LLM with language understanding, superior reasoning, and text generation.

This LLM follows instructions, completes requests, and generates creative text.

An MoE LLM that follows instructions, completes requests, and generates creative text.