Blueprint Overview

Use Case Description

The NVIDIA AI Blueprint for Video Search and Summarization (VSS) makes it easy to start building and customizing video analytics AI agents. These insightful, accurate, and interactive agents are powered by generative AI, vision language models (VLMs), large language models (LLMs), and NVIDIA NIM™ microservices, helping a variety of industries make better decisions faster. They can be given tasks in natural language and can perform complex operations such as video summarization and visual question answering, unlocking entirely new application possibilities.

Test the VSS blueprint in the cloud with Launchable, a set of preconfigured sandbox instances that let you quickly try the blueprint without bringing your own compute infrastructure.

Key Features

- Real-time and batch processing modes

- Video search

- Video summarization

- Interactive question answering (Q&A)

- Alerts

- Event reviewer and verification

- Object tracking

- Multimodal model fusion

- Audio support

Key Benefits

- Build video analytics AI agents that can analyze, interpret, and process vast amounts of video data at scale.

- Produce summaries of long videos up to 100X faster than going through the videos manually.

- Accelerate development time by bringing together various generative AI models and services to quickly build AI agents.

- Augment traditional computer vision pipelines with VLMs to provide deep video understanding.

- Provide a range of optimized deployments, from the enterprise edge to the cloud.

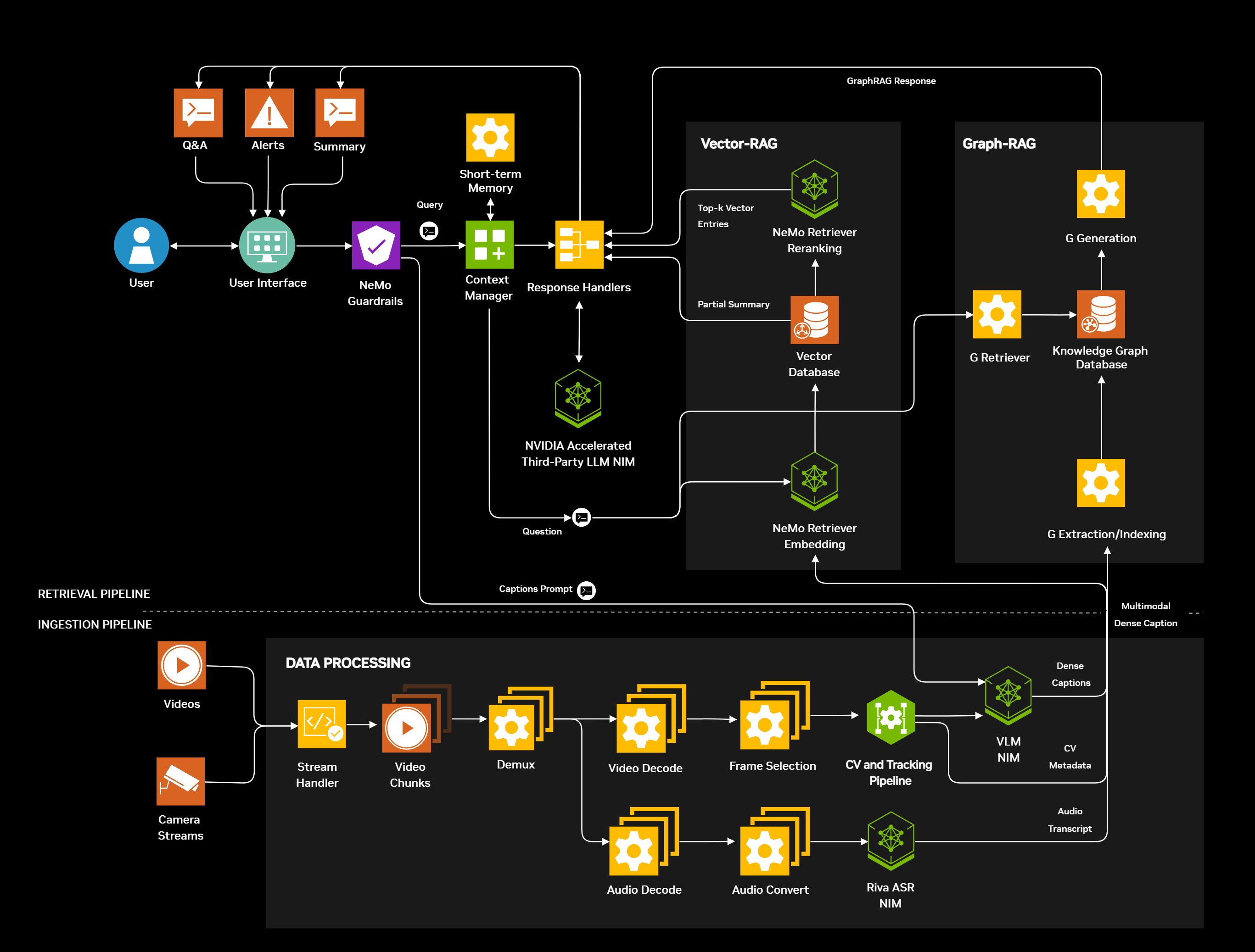

Architecture Diagram

Included NIM

The following NIM microservices are used in this blueprint:

Minimum System Requirements

Core Engine

The core video search and summarization blueprint pipeline supports the following hardware:

- RTX Pro 6000 WS/SE

- DGX Spark

- Jetson Thor

- B200

- H200

- H100

- A100

- L40/L40S

- A6000

Hosted NIMs

- Llama-3.1-70b-instruct: the minimum GPU configuration is listed in the NIM support matrix for this model.

- Cosmos Reason 2 VLM requires a minimum of 1 x L40S GPU.

- NeMo Retriever Embedding NIM requires a minimum of 1 x L40S GPU.

- NeMo Retriever Reranking NIM requires a minimum of 1 x L40S GPU.

- Parakeet-ctc-0.6b-ASR: the minimum GPU configuration is listed in the NIM support matrix for this model.

Minimum Local Deployment Configuration

The following configurations have been validated as minimal, local deployments.

- 1 x RTX Pro 6000 WS/SE/DGX Spark/Jetson Thor/B200/H100/H200/A100 (80 GB)

- 4 x L40/L40S/A6000

What’s Included in the Blueprint

NVIDIA AI Blueprints are customizable agentic workflow examples that include NIM microservices, reference code, documentation, and a Helm chart for deployment. This blueprint gives you a reference architecture to deploy a visual agent that can quickly generate insights from stored and streamed video through a scalable video ingestion pipeline, VLMs, and hybrid-RAG modules.

Core Technology

- Data processing pipeline: The pipeline decodes the video segments (chunks) generated by the stream handler, selects frames from each chunk, and uses a vision-language model (VLM) with a caption prompt to generate detailed captions for each chunk. These dense captions can be enhanced with object tracking metadata and audio transcriptions produced by the respective pipelines, and are then indexed into vector and graph databases for use in the Context-Aware Retrieval-Augmented Generation workflow.

- Context Manager: Coordinates its tools, a vision-language model (VLM) and a large language model (LLM) invoked as required, with key functions including a summary generator, an answer generator, and an alert handler. These tools and functions are used for summary generation, Q&A, and alert management. The context manager maintains its working context using both short-term memory, such as chat history, and long-term memory, such as the vector and graph databases, as needed.

- CA-RAG module: The Context-Aware Retrieval-Augmented Generation (CA-RAG) module leverages both Vector RAG and Graph-RAG as the primary sources for video understanding. During the Q&A workflow, the CA-RAG module extracts relevant context from the vector database and graph database to enhance temporal reasoning, anomaly detection, multi-hop reasoning, and scalability, thereby offering deeper contextual understanding and efficient management of extensive video data.

- NeMo Guardrails: Filters out invalid user prompts using the REST API of an LLM NIM microservice.
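The chunking, frame selection, and caption enhancement steps of the data processing pipeline can be illustrated with a small sketch. This is a hypothetical illustration, not the blueprint's implementation: `Chunk`, `make_chunks`, `attach_transcript`, and `build_caption_prompt` are invented names, frames are represented only by their timestamps (no video is decoded), uniform midpoint sampling is an assumed frame-selection strategy, and the `(start, end, text)` transcript segments stand in for output from an ASR model such as Parakeet.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    """One video segment: its time span, the timestamps of the frames sampled
    from it, and any transcript text that overlaps it."""
    start: float
    end: float
    frame_times: list[float] = field(default_factory=list)
    transcript: list[str] = field(default_factory=list)


def make_chunks(duration: float, chunk_len: float, frames_per_chunk: int) -> list[Chunk]:
    """Split [0, duration) into fixed-length chunks (the last may be shorter)
    and sample frames_per_chunk evenly spaced timestamps from each one."""
    if chunk_len <= 0 or frames_per_chunk < 1:
        raise ValueError("chunk_len must be positive and frames_per_chunk >= 1")
    chunks = []
    start = 0.0
    while start < duration:
        end = min(start + chunk_len, duration)
        step = (end - start) / frames_per_chunk
        # Take the midpoint of each of frames_per_chunk equal sub-intervals.
        times = [start + step * (i + 0.5) for i in range(frames_per_chunk)]
        chunks.append(Chunk(start, end, times))
        start = end
    return chunks


def attach_transcript(chunks: list[Chunk], segments: list[tuple[float, float, str]]) -> list[Chunk]:
    """Attach each (start, end, text) ASR segment to every chunk it overlaps."""
    for chunk in chunks:
        for seg_start, seg_end, text in segments:
            if seg_start < chunk.end and seg_end > chunk.start:
                chunk.transcript.append(text)
    return chunks


def build_caption_prompt(base_prompt: str, chunk: Chunk) -> str:
    """Combine the caption prompt with the chunk's time span and transcript."""
    lines = [base_prompt, f"Segment: {chunk.start:.1f}s to {chunk.end:.1f}s"]
    if chunk.transcript:
        lines.append("Audio transcript: " + " ".join(chunk.transcript))
    return "\n".join(lines)


# Example: a 25 s clip, 10 s chunks, 2 frames per chunk, one transcript segment.
chunks = attach_transcript(make_chunks(25.0, 10.0, 2), [(8.0, 12.0, "A forklift enters.")])
print(build_caption_prompt("Describe the events in this clip.", chunks[0]))
```

A transcript segment that straddles a chunk boundary is attached to both chunks here, so neither caption loses the audio context; object tracking metadata could be merged into the prompt in the same way.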

Example Walkthrough

The user selects an example video and a prompt to guide the agent in generating a detailed summary. The agent splits the input video into smaller segments (chunks), which the VLM pipeline processes in parallel (the preview uses OpenAI's GPT-4o as the VLM) to produce detailed captions describing the events in each chunk in a scalable and efficient manner. Once all chunk captions are processed, the agent recursively summarizes the dense captions using an LLM to generate a final summary of the entire video.
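The parallel captioning and recursive summarization steps can be sketched as follows. This is a minimal sketch under stated assumptions, not the blueprint's code: `caption_chunks`, `recursive_summarize`, `stub_vlm`, and `stub_llm` are hypothetical names, the two stubs stand in for real VLM and LLM calls so the control flow can run, and the fixed `batch_size` (how many texts go into one LLM call) is an assumed parameter.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable


def caption_chunks(chunks: Iterable, caption_fn: Callable[[object], str], max_workers: int = 4) -> list[str]:
    """Caption chunks concurrently. Executor.map yields results in input order,
    so captions stay aligned with the video timeline."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(caption_fn, chunks))


def recursive_summarize(captions: list[str], summarize: Callable[[list[str]], str], batch_size: int = 4) -> str:
    """Summarize captions in batches, then summarize those summaries, and so on
    until one summary remains (always at least one summarize call)."""
    if batch_size < 2:
        raise ValueError("batch_size must be >= 2 so each round shrinks the list")
    if not captions:
        return ""
    level = list(captions)
    while True:
        level = [summarize(level[i:i + batch_size]) for i in range(0, len(level), batch_size)]
        if len(level) == 1:
            return level[0]


# Hypothetical stand-ins for the VLM and LLM calls.
def stub_vlm(chunk_id: int) -> str:
    return f"caption {chunk_id}"


def stub_llm(texts: list[str]) -> str:
    # Wraps its inputs in parentheses so the summarization tree is visible.
    return "(" + " + ".join(texts) + ")"


captions = caption_chunks(range(5), stub_vlm)
summary = recursive_summarize(captions, stub_llm, batch_size=2)
print(summary)
```

Because each round shrinks the list by roughly a factor of `batch_size`, the number of LLM rounds grows only logarithmically with the number of chunks, and no single LLM call has to hold every caption of a long video.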

We also provide examples that demonstrate the computer vision pipeline with object tracking, as well as audio support.

Additionally, these captions are stored in vector and graph databases to power the Q&A feature of this blueprint, allowing the user to ask open-ended questions about the video.
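The vector side of the Q&A path can be illustrated with a toy retrieval sketch. It does not model the graph database, and it rests on loud assumptions: the bag-of-words `embed` function is a hypothetical stand-in for a real embedding model such as the NeMo Retriever Embedding NIM, and `CaptionIndex` and `build_qa_prompt` are invented names, not blueprint APIs.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class CaptionIndex:
    """In-memory vector index of timestamped chunk captions."""

    def __init__(self) -> None:
        self.entries: list[tuple[float, str, Counter]] = []

    def add(self, start_time: float, caption: str) -> None:
        self.entries.append((start_time, caption, embed(caption)))

    def retrieve(self, question: str, k: int = 2) -> list[tuple[float, str]]:
        """Return the k most similar captions, re-sorted by time so the LLM
        sees the retrieved context in chronological order."""
        q = embed(question)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[2]), reverse=True)[:k]
        return sorted((t, c) for t, c, _ in ranked)


def build_qa_prompt(question: str, context: list[tuple[float, str]]) -> str:
    """Format retrieved captions with timestamps ahead of the question."""
    lines = [f"[{t:.0f}s] {c}" for t, c in context]
    return "Context:\n" + "\n".join(lines) + f"\nQuestion: {question}"


index = CaptionIndex()
index.add(0.0, "A worker walks into the warehouse.")
index.add(10.0, "A forklift moves pallets near the loading dock.")
index.add(20.0, "The forklift stops and the worker waves.")
hits = index.retrieve("When did the forklift stop?", k=2)
print(build_qa_prompt("When did the forklift stop?", hits))
```

Re-sorting the retrieved captions by timestamp is one simple way to preserve temporal order for the answering LLM; a real deployment would also consult the graph database for multi-hop relationships.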

License

Use of the models in this blueprint is governed by the NVIDIA AI Foundation Models Community License.

Terms of Use

GOVERNING TERMS: This preview is governed by the NVIDIA API Trial Terms of Service.

Additional Information:

For the model that includes Llama 3.1: Llama 3.1 Community License Agreement, Built with Llama.

For the NVIDIA Retrieval QA Llama 3.2 1B Embedding v2 and NVIDIA Retrieval QA Llama 3.2 1B Reranking v2: Llama 3.2 Community License Agreement, Built with Llama.

For https://github.com/google-research/big_vision/blob/main/LICENSE and https://github.com/01-ai/Yi/blob/main/LICENSE: Apache 2.0 license.