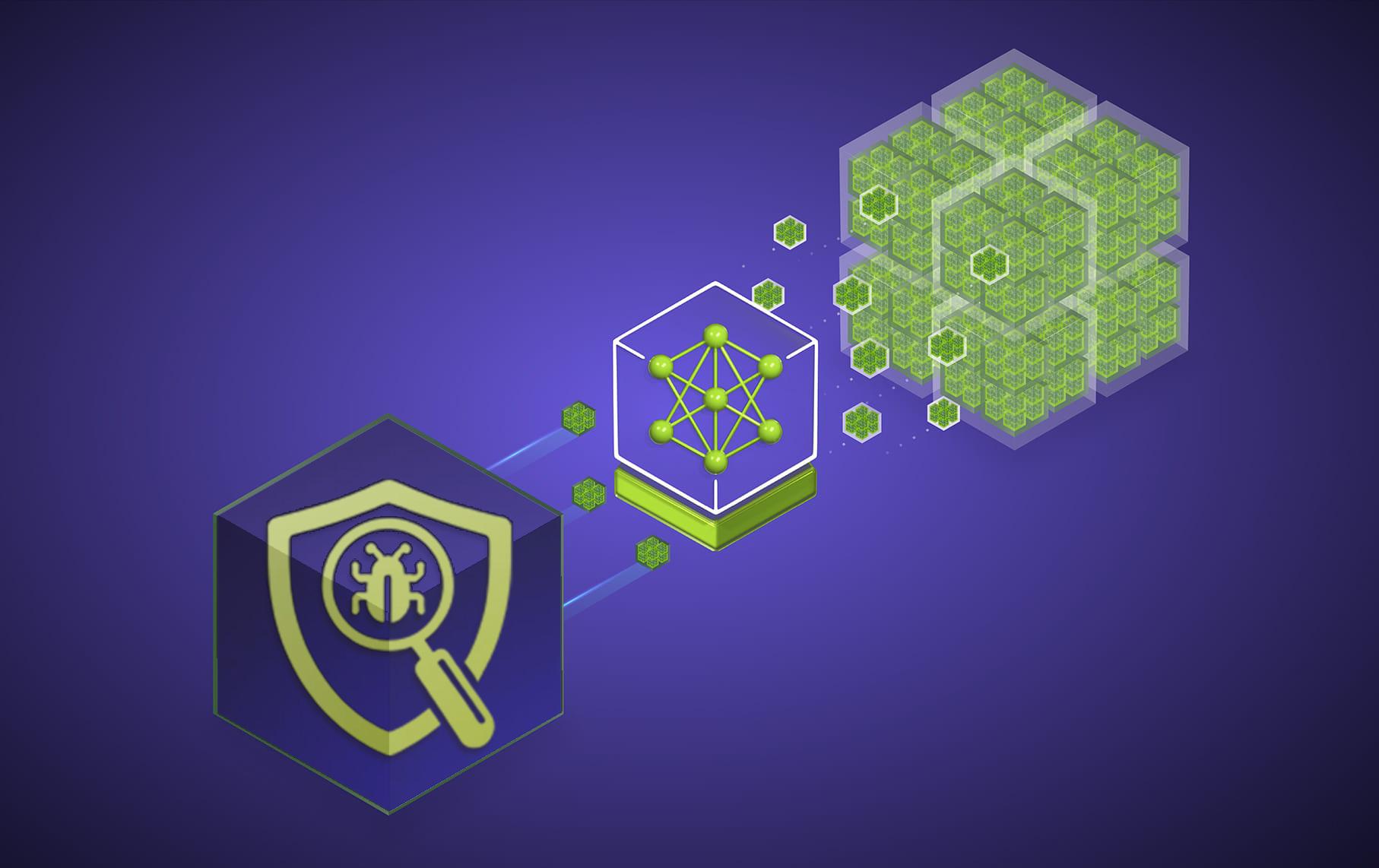

This experience showcases vulnerability analysis for container security using NVIDIA NIM microservices and NVIDIA NeMo Agent Toolkit. The NIM Agent Blueprint demonstrates accelerated analysis of common vulnerabilities and exposures (CVEs) at enterprise scale, reducing the time needed to assess a vulnerability from hours or days to seconds. Traditional methods require substantial manual effort to determine how to address each vulnerability; these technologies instead enable fast, automated, and actionable CVE risk analysis using large language models (LLMs) and retrieval-augmented generation (RAG). With this blueprint, security analysts can more quickly determine whether a software package includes vulnerable and exploitable components, using LLMs and event-driven RAG triggered by the creation of a new software package or the detection of a CVE.

Patching software security issues is becoming increasingly challenging as the rate of new reports to the CVE database accelerates. Triaging a single container for these vulnerabilities requires retrieving, understanding, and integrating hundreds of pieces of information. At this volume, the traditional approach to scanning and patching has become unmanageable.

Generative AI can improve vulnerability defense while decreasing the load on security teams. Using NVIDIA NIM microservices and the NeMo Agent Toolkit, the NIM Agent Blueprint accelerates CVE analysis at enterprise scale, dramatically reducing time to assess from days to just seconds.

The LLM accelerates the manual work of a human security analyst by researching and investigating each reported CVE in depth: confirming whether the vulnerability is present, identifying false positives, generating an investigation checklist, and determining whether the vulnerability is actually exploitable.

After the required data is processed, a checklist tailored to each CVE is generated and passed to the agent, which loops through the analysis until every checklist item is triaged. The application then summarizes the findings, generates justifications for the recommended actions, and passes them to a human analyst, who decides on the appropriate next steps.

In this way, security analysts can cut through the noise of the growing number of CVEs and focus on the most critical security tasks.

The following are used by this blueprint:

Hardware Requirements

The vulnerability analysis workflow supports the following hardware (only required if self-hosting NIMs):

OS Requirements

Inference

Example Container and CVEs

Containers:

Vulnerability Alerts:

GHSA-3f63-hfp8-52jq: Arbitrary Code Execution in Pillow

CVE-2024-21762: Fortinet FortiOS SSL VPN out-of-bounds write allows remote code execution

CVE-2022-47695: Binutils objdump denial of service via malformed Mach-O files

The blueprint uses a Plan-and-Execute-style LLM workflow for CVE impact analysis. It does not include the initial CVE risk analysis tooling. The workflow is adaptable and supports various LLM services, such as NIM microservices and OpenAI, as well as any other LLM supported by NeMo Agent Toolkit.

Security Scan Result

The workflow takes as input the CVEs identified by a container security scan. The scan can be produced by a container image scanner of your choosing, such as Anchore.
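As a rough illustration of this input step, the sketch below pulls the unique vulnerability IDs out of a scanner report. It assumes the JSON layout produced by Grype, Anchore's open-source scanner (a top-level `matches` list whose entries each hold a `vulnerability.id`); the function name `extract_vuln_ids` is hypothetical, and the blueprint's own input format may differ.

```python
import json
from typing import List

def extract_vuln_ids(report_json: str) -> List[str]:
    """Return unique vulnerability IDs (CVE or GHSA) from a Grype-style JSON report.

    Assumption: the report has a top-level "matches" list whose entries contain a
    "vulnerability" object with an "id" field, as Grype's JSON output does.
    """
    report = json.loads(report_json)
    seen = set()
    ids: List[str] = []
    for match in report.get("matches", []):
        vuln_id = match.get("vulnerability", {}).get("id")
        # The same CVE can match several packages; keep only its first occurrence.
        if vuln_id and vuln_id not in seen:
            seen.add(vuln_id)
            ids.append(vuln_id)
    return ids

# Illustrative report fragment (not real scanner output).
sample = json.dumps({"matches": [
    {"vulnerability": {"id": "GHSA-3f63-hfp8-52jq"}, "artifact": {"name": "pillow"}},
    {"vulnerability": {"id": "CVE-2022-47695"}, "artifact": {"name": "binutils"}},
    {"vulnerability": {"id": "CVE-2022-47695"}, "artifact": {"name": "libbinutils"}},
]})
print(extract_vuln_ids(sample))  # ['GHSA-3f63-hfp8-52jq', 'CVE-2022-47695']
```

Deduplicating here matters because one CVE often matches several installed packages, while the downstream analysis runs once per vulnerability.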

Code Repository and Documentation

The blueprint pulls code repositories and documentation provided by the user. These repositories are processed through an embedding model, and the resulting embeddings are stored in vector databases (VDBs) for the agent's reference.
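Before embedding, source files are typically split into smaller chunks. The chunking strategy the blueprint uses is not described here, so the following is a minimal sketch under assumed settings: `chunk_source` (a hypothetical helper) cuts a file into overlapping windows of lines, so that lines near a chunk boundary also appear in the next chunk together with their neighbors. The 40-line window and 10-line overlap are illustrative values only.

```python
from typing import List

def chunk_source(text: str, max_lines: int = 40, overlap: int = 10) -> List[str]:
    """Split source text into windows of max_lines lines, each sharing `overlap` lines with the previous one."""
    if not 0 <= overlap < max_lines:
        raise ValueError("overlap must satisfy 0 <= overlap < max_lines")
    lines = text.splitlines()
    step = max_lines - overlap
    chunks: List[str] = []
    for start in range(0, len(lines), step):
        chunks.append("\n".join(lines[start:start + max_lines]))
        # Stop once a window reaches the end of the file, to avoid a redundant tail chunk.
        if start + max_lines >= len(lines):
            break
    return chunks

source = "\n".join(f"line {i}" for i in range(100))
chunks = chunk_source(source)
print(len(chunks))  # 3
print(chunks[1].splitlines()[0])  # line 30
```

Each chunk would then be passed to the embedding model, with its file path kept alongside so the agent can cite where retrieved code came from.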

Vector Database

Various vector databases can be used to store the embeddings. We currently use FAISS because it does not require an external service and is simple to use. Other vector stores or vector search libraries can be substituted, such as NVIDIA cuVS, which provides GPU-accelerated indexing and search.
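To make the retrieval step concrete, the following pure-Python sketch performs exact cosine-similarity search over stored embeddings, which is roughly what an exact FAISS index such as `IndexFlatIP` computes over L2-normalized vectors. The `FlatIndex` class, the file names, and the toy three-dimensional vectors are hypothetical stand-ins; a real deployment would use FAISS or cuVS rather than a Python loop.

```python
import math
from typing import List, Sequence, Tuple

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class FlatIndex:
    """Exact nearest-neighbour search by scanning every stored vector."""

    def __init__(self) -> None:
        self.vectors: List[List[float]] = []
        self.payloads: List[str] = []

    def add(self, vector: Sequence[float], payload: str) -> None:
        self.vectors.append(list(vector))
        self.payloads.append(payload)

    def search(self, query: Sequence[float], k: int = 3) -> List[Tuple[float, str]]:
        scored = [(cosine(query, v), p) for v, p in zip(self.vectors, self.payloads)]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]

# Toy 3-dimensional "embeddings"; real ones come from the embedding model.
index = FlatIndex()
index.add([1.0, 0.0, 0.0], "image_utils.py")
index.add([0.0, 1.0, 0.0], "README.md")
index.add([0.7, 0.7, 0.0], "render.py")
results = index.search([0.9, 0.1, 0.0], k=2)
print([name for _, name in results])  # ['image_utils.py', 'render.py']
```

A brute-force scan costs time linear in the number of stored vectors, which is why dedicated libraries with optimized or approximate indexes become important as the indexed codebase grows.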

Web Vulnerability Intel

The system collects detailed information about each CVE through web scraping and data retrieval from various public security databases, including GHSA, Red Hat, Ubuntu, and NIST CVE records, as well as tailored threat intelligence feeds.
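The following sketch shows one way to route each vulnerability ID to public advisory sources. The `intel_urls` helper and its choice of sources are assumptions for illustration: the URL patterns point at the public NVD CVE API 2.0, Red Hat, Ubuntu, and GitHub Advisory Database pages, but the blueprint's actual scrapers and endpoints may differ. Fetching and parsing the pages is left out.

```python
import re
from typing import Dict

CVE_ID = re.compile(r"CVE-\d{4}-\d{4,}")
GHSA_ID = re.compile(r"GHSA-[0-9a-z]{4}-[0-9a-z]{4}-[0-9a-z]{4}")

def intel_urls(vuln_id: str) -> Dict[str, str]:
    """Map a CVE or GHSA identifier to public advisory URLs (illustrative source list)."""
    vuln_id = vuln_id.strip()
    if GHSA_ID.fullmatch(vuln_id):
        return {"ghsa": f"https://github.com/advisories/{vuln_id}"}
    if CVE_ID.fullmatch(vuln_id):
        return {
            # The NVD CVE API 2.0 returns the CVE record as JSON.
            "nvd": f"https://services.nvd.nist.gov/rest/json/cves/2.0?cveId={vuln_id}",
            "redhat": f"https://access.redhat.com/security/cve/{vuln_id.lower()}",
            "ubuntu": f"https://ubuntu.com/security/{vuln_id}",
        }
    raise ValueError(f"unrecognized vulnerability ID: {vuln_id!r}")

for vid in ["CVE-2024-21762", "GHSA-3f63-hfp8-52jq"]:
    print(vid, sorted(intel_urls(vid)))
```

Keeping the per-source results separate, rather than merging them immediately, lets later steps attribute each fact to the advisory it came from.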

SBOM

The provided Software Bill of Materials (SBOM) document is processed into a machine-readable format that the agent can query. SBOMs can be generated for any container using the open-source tool Syft.
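As an example of turning an SBOM into something the agent can query, the sketch below indexes a Syft JSON SBOM by package name. It assumes Syft's native JSON output, in which packages appear in a top-level `artifacts` list with `name` and `version` fields; `load_syft_packages` is a hypothetical helper, and the blueprint's own SBOM processing may use a different format.

```python
import json
from typing import Dict, Set

def load_syft_packages(sbom_json: str) -> Dict[str, Set[str]]:
    """Index a Syft JSON SBOM as {lowercased package name: set of versions}."""
    sbom = json.loads(sbom_json)
    packages: Dict[str, Set[str]] = {}
    for artifact in sbom.get("artifacts", []):
        name = artifact.get("name")
        if not name:
            continue
        # Lowercase names so lookups such as "Pillow" and "pillow" agree.
        packages.setdefault(name.lower(), set()).add(artifact.get("version", ""))
    return packages

# Illustrative SBOM fragment; the versions are placeholders.
sample_sbom = json.dumps({"artifacts": [
    {"name": "Pillow", "version": "1.0.0", "type": "python"},
    {"name": "binutils", "version": "2.0.0", "type": "deb"},
]})
packages = load_syft_packages(sample_sbom)
print(sorted(packages))  # ['binutils', 'pillow']
```

A set of versions per name is used because a container can ship more than one copy of the same package.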

Lexical Search

As an alternative, a lexical search is available for use cases where creating embeddings is impractical because the target container has a large number of source files.
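A lexical search can be as simple as a regular-expression scan over the source tree. The sketch below is a hypothetical `lexical_search` helper, not the blueprint's implementation; the file suffixes and hit limit are illustrative assumptions.

```python
import re
import tempfile
from pathlib import Path
from typing import List, Tuple

def lexical_search(root: str, pattern: str,
                   suffixes: Tuple[str, ...] = (".py", ".c", ".h"),
                   max_hits: int = 50) -> List[Tuple[str, int, str]]:
    """Return (path, line number, stripped line) for lines matching `pattern` under `root`."""
    regex = re.compile(pattern)
    hits: List[Tuple[str, int, str]] = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="replace")
        except OSError:
            continue  # unreadable file: skip it rather than abort the whole search
        for lineno, line in enumerate(text.splitlines(), start=1):
            if regex.search(line):
                hits.append((str(path), lineno, line.strip()))
                if len(hits) >= max_hits:
                    return hits
    return hits

with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "render.py").write_text("from PIL import ImageMath\nresult = ImageMath.eval(expr)\n")
    Path(tmp, "notes.txt").write_text("ImageMath.eval mentioned in notes\n")
    hits = lexical_search(tmp, r"ImageMath\.eval")
    hit_summary = [(Path(p).name, n) for p, n, _ in hits]
print(hit_summary)  # [('render.py', 2)]
```

Unlike embedding search, this needs no preprocessing, but it only finds literal or pattern matches, so the agent has to know which identifiers to look for, for example a function name taken from the advisory.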

Preprocessing

All of the above steps are encapsulated in multiple NeMo Agent Toolkit functions that prepare the data for use with the LLM engine.

Checklist Generation

Leveraging the gathered information about each vulnerability, the checklist generation node creates a tailored, context-sensitive task checklist designed to guide the impact analysis.
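The checklist step can be pictured as a prompt plus a parser. The prompt template and the helper names below (`build_checklist_prompt`, `parse_checklist`) are hypothetical, not the blueprint's actual prompt; the sketch only shows the general pattern of asking the model for a numbered list and extracting its items.

```python
import re
from typing import List

# Hypothetical prompt template; the blueprint's real prompts are not shown here.
CHECKLIST_PROMPT = (
    "You are a security analyst. Using the vulnerability details and package "
    "information below, write a numbered checklist of concrete steps for "
    "determining whether {vuln_id} is exploitable in this container.\n\n"
    "Vulnerability details:\n{intel}\n\n"
    "Relevant packages:\n{packages}\n"
)

# Matches "1. text" or "2) text", capturing the text without surrounding whitespace.
ITEM = re.compile(r"^\s*\d+[.)]\s+(.*\S)\s*$")

def build_checklist_prompt(vuln_id: str, intel: str, packages: str) -> str:
    return CHECKLIST_PROMPT.format(vuln_id=vuln_id, intel=intel, packages=packages)

def parse_checklist(llm_output: str) -> List[str]:
    """Keep only numbered lines from the model's reply, dropping any preamble."""
    items = []
    for line in llm_output.splitlines():
        match = ITEM.match(line)
        if match:
            items.append(match.group(1))
    return items

reply = ("Here is the checklist:\n"
         "1. Check whether Pillow is installed.\n"
         "2) Search the code for calls to ImageMath.eval.\n"
         "3.   Determine whether user input can reach those calls.  \n")
checklist = parse_checklist(reply)
print(checklist)
```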

Task Agent

At the core of the process is an LLM agent that iterates through each item in the checklist. For each item, the agent answers the question using a set of tools that provide information about the target container. The tools draw on various data sources (web intel, the vector database, search, etc.) to retrieve information relevant to each checklist item. The loop continues until the agent has satisfactorily resolved every checklist item.
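The control flow of the task agent can be sketched as a nested loop. In this hypothetical version, a `policy` function stands in for the LLM's decision about which tool to call next (or whether to stop), tools are plain Python callables returning canned text, and `max_steps` is an assumed safety cap so that a single item cannot loop forever. It illustrates the loop structure only, not the blueprint's LangGraph implementation.

```python
from typing import Callable, Dict, List, Optional

Tool = Callable[[str], str]
# Chooses the next tool for a checklist item given the observations so far,
# or returns None when the item can be answered. In the blueprint the LLM
# makes this decision; here it is an ordinary function.
Policy = Callable[[str, List[str]], Optional[str]]

def run_checklist(items: List[str], tools: Dict[str, Tool], policy: Policy,
                  max_steps: int = 3) -> Dict[str, List[str]]:
    findings: Dict[str, List[str]] = {}
    for item in items:
        observations: List[str] = []
        for _ in range(max_steps):  # hypothetical cap on tool calls per item
            tool_name = policy(item, observations)
            if tool_name is None:
                break
            observations.append(f"{tool_name}: {tools[tool_name](item)}")
        findings[item] = observations
    return findings

# Stub tools returning canned text in place of real SBOM and code-search lookups.
tools: Dict[str, Tool] = {
    "sbom_lookup": lambda query: "pillow is listed in the SBOM",
    "code_search": lambda query: "no calls to ImageMath.eval found",
}

def keyword_policy(item: str, observations: List[str]) -> Optional[str]:
    """Toy stand-in for the LLM: call one tool chosen by keyword, then stop."""
    if observations:
        return None
    return "sbom_lookup" if "installed" in item else "code_search"

findings = run_checklist(
    ["Check whether Pillow is installed.", "Search the code for calls to ImageMath.eval."],
    tools, keyword_policy)
for item, obs in findings.items():
    print(item, "->", obs)
```

The per-item observation lists are what a summarization step would then condense.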

Summarization

Once the agent has compiled findings for each checklist item, these results are condensed by the summarization node into a concise, human-readable paragraph.

Justification

Given the summary, the justification status categorization node then assigns a resulting VEX (Vulnerability Exploitability eXchange) status to the CVE.

We provide a set of predefined categories for the model to choose from. If the CVE is deemed exploitable, the category is "vulnerable." If it is not exploitable, the model chooses from 10 reasoning categories that explain why the vulnerability is not exploitable in the given environment:

false_positive

code_not_present

code_not_reachable

requires_configuration

requires_dependency

requires_environment

protected_by_compiler

protected_at_runtime

protected_by_perimeter

protected_by_mitigating_control
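Because the model must choose from a fixed label set, its answer needs to be normalized and validated. The sketch below (with hypothetical names `parse_justification` and `is_exploitable`) maps a free-text answer onto the 11 categories listed above and returns `None` for anything else, so that an unrecognized answer is flagged for the analyst rather than silently coerced into a category. That fallback is a design assumption, not documented blueprint behavior.

```python
from typing import Optional

NOT_EXPLOITABLE = frozenset({
    "false_positive", "code_not_present", "code_not_reachable",
    "requires_configuration", "requires_dependency", "requires_environment",
    "protected_by_compiler", "protected_at_runtime", "protected_by_perimeter",
    "protected_by_mitigating_control",
})
CATEGORIES = NOT_EXPLOITABLE | {"vulnerable"}

def parse_justification(llm_output: str) -> Optional[str]:
    """Normalize a free-text answer to a predefined category, or return None."""
    # Drop surrounding whitespace, punctuation, and quote/backtick characters.
    label = llm_output.strip().strip(".\"'`").strip().lower()
    label = label.replace("-", "_").replace(" ", "_")
    return label if label in CATEGORIES else None

def is_exploitable(category: str) -> bool:
    return category == "vulnerable"

for answer in [" Code not reachable.", "`vulnerable`", "probably fine"]:
    print(repr(answer), "->", parse_justification(answer))
```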

At the end of the workflow run, an output including all the gathered and generated information is prepared for security analysts for a final review.

Note: All output should be vetted by a security analyst before being used in a cybersecurity application.

NIM microservices

The NeMo Agent Toolkit can use various LLM endpoints and is optimized for NVIDIA NIM microservices. The current default for all of the NIM LLM models is llama-3.1-70b-instruct, with specifically tailored prompt engineering and edge-case handling. Other models can be substituted, such as smaller fine-tuned NIM models or other external LLM services. Subsequent updates will provide more details about fine-tuning and data flywheel techniques.
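NIM LLM endpoints expose an OpenAI-compatible chat completions API, which is why substituting a model is largely a matter of changing the model ID or base URL. The sketch below builds, but does not send, such a request using only the standard library. The hosted base URL and the `meta/llama-3.1-70b-instruct` model ID refer to the NVIDIA API catalog; `build_chat_request` and the temperature value are hypothetical, and in the blueprint itself models are configured through the NeMo Agent Toolkit workflow rather than hand-built HTTP requests.

```python
import json
import urllib.request

# Hosted NVIDIA API catalog endpoint; a self-hosted NIM would use its own base URL.
NIM_BASE_URL = "https://integrate.api.nvidia.com/v1"
DEFAULT_MODEL = "meta/llama-3.1-70b-instruct"

def build_chat_request(prompt: str, api_key: str, model: str = DEFAULT_MODEL,
                       base_url: str = NIM_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,  # swap in another model ID to change the LLM
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.0,  # illustrative setting, not the blueprint's
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )

request = build_chat_request("Summarize the checklist findings.", api_key="YOUR_API_KEY")
print(request.full_url)
# urllib.request.urlopen(request) would send it; that needs a valid key and network access.
```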

Note: Within the NeMo Agent Toolkit workflow, the LangChain framework is used to deploy all LLMs and agents, and the LangGraph framework is used for orchestration. Building on these existing frameworks streamlines development and avoids duplicating functionality they already provide.

Note: Routinely evaluating outputs against validation datasets is critical to ensuring accurate and consistent results.

To explore example walkthroughs on the NVIDIA API catalog, follow the links to the specific NIM microservices below:

By using this software or microservice, you are agreeing to the terms and conditions of the license and acceptable use policy.

GOVERNING TERMS: The NIM container is governed by the NVIDIA Software License Agreement and Product-Specific Terms for AI Products; and use of this model is governed by the NVIDIA AI Foundation Models Community License Agreement.

ADDITIONAL Terms: Meta Llama 3 Community License, Built with Meta Llama 3.

Rapidly identify and mitigate container security vulnerabilities with generative AI.