Smaller Mixture of Experts (MoE) text-only LLM for efficient AI reasoning and math

OpenAI has released the gpt-oss family of open-weight models, designed for powerful reasoning, agentic tasks, and versatile developer use cases. The family consists of two models:

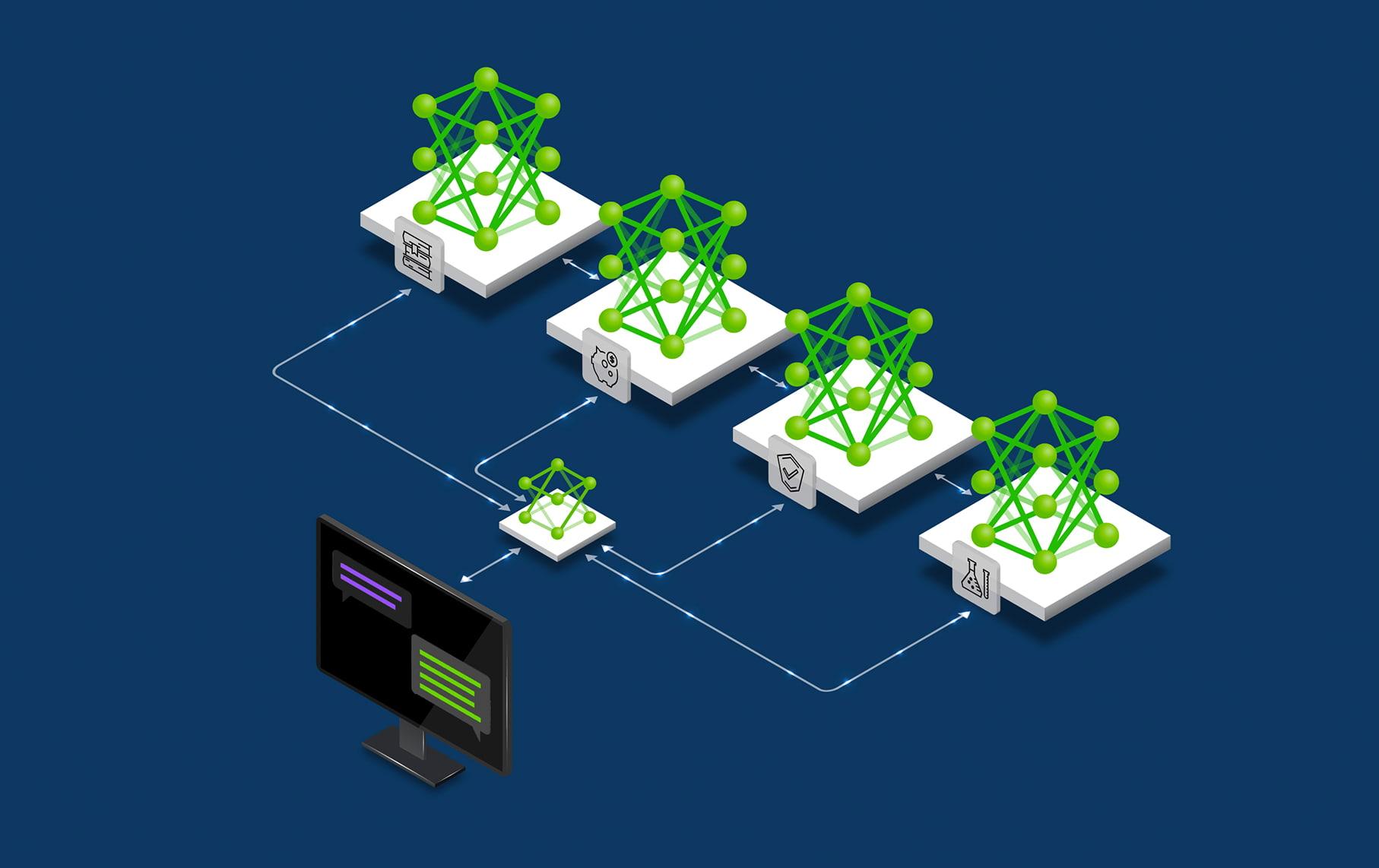

- gpt-oss-120b: for production, general-purpose, high-reasoning use cases; fits on a single H100 GPU (117B parameters, 5.1B active).
- gpt-oss-20b: for lower-latency and local or specialized use cases (21B parameters, 3.6B active).

gpt-oss-20b is a Mixture-of-Experts (MoE) model with the same architecture as the larger 117B variant, differing only in its hyperparameters. It uses SwiGLU activations and learned attention sinks. Functionally, it is a reasoning model that supports chain-of-thought reasoning, adjustable reasoning effort levels, instruction following, and tool use. Both input and output are text only. A key benefit for enterprises and governments is that the model can be deployed on premises or in a private cloud, which keeps data under their control for security and privacy.
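A learned attention sink can be pictured as one extra per-head logit that takes part in the softmax normalization but has no value vector, so a head can place part of its attention mass on "nothing". The sketch below shows this for a single query and a single head in plain Python. It is a minimal illustration under the assumption that the sink is one scalar logit per head; the function name `softmax_with_sink` and the toy scores are hypothetical, and this is not the model's own implementation.

```python
import math


def softmax_with_sink(scores, sink_logit):
    """Attention weights for one query and one head with a learned sink.

    `scores` are the scaled query-key logits for the visible keys and
    `sink_logit` is the head's learned sink value (hypothetical inputs).
    The sink joins the softmax normalization but has no value vector, so
    the returned weights over real tokens sum to less than 1.
    """
    m = max(max(scores), sink_logit)  # shared max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    denom = sum(exps) + math.exp(sink_logit - m)
    return [e / denom for e in exps]


if __name__ == "__main__":
    w = softmax_with_sink([1.0, 0.5, -0.2], sink_logit=2.0)
    print([round(x, 3) for x in w], round(sum(w), 3))
```

With a very negative sink logit the result approaches an ordinary softmax; a large sink logit lets the head attend to almost nothing.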

Model Highlights:

This model is ready for commercial/non-commercial use.

This model is not owned or developed by NVIDIA. This model has been developed and built to a third-party’s requirements for this application and use case; see link to Non-NVIDIA gpt-oss-20b model card.

GOVERNING TERMS: This trial service is governed by the NVIDIA API Trial Terms of Service. Use of this model is governed by the NVIDIA Community Model License. Additional Information: Apache License Version 2.0.

Deployment Geography: Global

Intended for use as a reasoning model, offering features like chain-of-thought and adjustable reasoning effort levels. It provides comprehensive support for instruction following and tool use, fostering transparency, customization, and deployment flexibility for developers, researchers, and startups. Crucially, it enables enterprises and governments to deploy on-premises or in private clouds, ensuring stringent data security and privacy requirements are met.

Build.NVIDIA.com - 08/05/2025 via link

Hugging Face - 08/05/2025 via link

Architecture Type: Transformer

Network Architecture: Mixture-of-Experts (MoE)

Total Parameters: 21B

Active Parameters: 3.6B

Vocabulary Size: 201,088 (Utilizes the standard tokenizer used by GPT-4o)

Input Type(s): Text

Input Format(s): String

Input Parameters: One Dimensional (1D)

Other Properties Related to Input: Uses RoPE with a 128k context length; attention layers alternate between full context and a 128-token sliding window. Includes a learned attention sink per head. Employs SwiGLU activations in the MoE layers; the router performs a Top-K operation (K=4) followed by a softmax over the selected experts' logits. GEMMs in the MoE include a per-expert bias. Uses tiktoken for tokenization.

Input Context Length (ISL): 128000
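The routing and attention-window properties above can be sketched in plain Python. This is a minimal illustration, not the model's code: `route` and `can_attend` are hypothetical names, and the router normalizes the K=4 selected logits with a softmax, which is how the publicly released gpt-oss reference code weights the chosen experts. Which layers use the sliding window (even-indexed here) and the exact window boundary (the query token plus the 127 tokens before it) are assumptions.

```python
import math

TOP_K = 4     # K=4, as stated in this card
WINDOW = 128  # sliding-window size in tokens, as stated in this card


def route(router_logits, k=TOP_K):
    """Return (expert_index, weight) pairs for one token.

    Keeps the k largest router logits and normalizes only those with a
    softmax, so the selected experts' weights sum to 1.
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract max for stability
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def can_attend(q_pos, k_pos, layer_idx, window=WINDOW):
    """Causal mask predicate for one (query, key) pair.

    Assumption: even-indexed layers use the sliding window and odd-indexed
    layers attend over the full (causal) context.
    """
    if k_pos > q_pos:
        return False  # causal: no attention to future tokens
    if layer_idx % 2 == 0:
        return q_pos - k_pos < window  # query plus the previous window-1 tokens
    return True


if __name__ == "__main__":
    print(route([0.1, 2.0, -1.0, 3.0, 0.5, 1.5]))
    print(can_attend(200, 50, layer_idx=0), can_attend(200, 50, layer_idx=1))
```

In a full MoE layer, each selected expert's output would be multiplied by its weight and the results summed; the sketch stops at the routing decision.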

Output Type(s): Text

Output Format: String

Output Parameters: One Dimensional (1D)

Other Properties Related to Output: The model is architected to be compatible with the OpenAI Responses API and supports Structured Output, aligning with key partner expectations for advanced response formatting.

Our AI models are designed and/or optimized to run on NVIDIA GPU-accelerated systems. By leveraging NVIDIA’s hardware (e.g., GPU cores) and software frameworks (e.g., CUDA libraries), the model achieves faster training and inference times compared to CPU-only solutions.

Runtime Engine(s):

Supported Hardware Microarchitecture Compatibility:

Operating System(s): Linux

gpt-oss-20b v1.0 (August 5, 2025)

| Benchmark | gpt-oss-120b | gpt-oss-20b |
|---|---|---|
| AIME 2024 (no tools) | 95.8 | 92.1 |
| AIME 2024 (with tools) | 96.6 | 96.0 |
| AIME 2025 (no tools) | 92.5 | 91.7 |
| AIME 2025 (with tools) | 97.9 | 98.7 |
| GPQA Diamond (no tools) | 80.1 | 71.5 |
| GPQA Diamond (with tools) | 80.9 | 74.2 |
| HLE (no tools) | 14.9 | 10.9 |
| HLE (with tools) | 19.0 | 17.3 |
| MMLU | 90.0 | 85.3 |
| SWE-Bench Verified | 62.4 | 60.7 |
| Tau-Bench Retail | 67.8 | 54.4 |
| Tau-Bench Airline | 49.2 | 38.0 |
| Aider Polyglot | 44.4 | 34.2 |
| MMMLU (Average) | 81.3 | 75.6 |
| HealthBench | 57.6 | 42.5 |
| HealthBench Hard | 30.0 | 10.8 |
| HealthBench Consensus | 89.9 | 82.6 |
| Codeforces (no tools) (Elo) | 2463 | 2230 |
| Codeforces (with tools) (Elo) | 2622 | 2516 |

The scores above were measured at the high reasoning level.

The following evaluations check that the model does not comply with requests for content that is disallowed under OpenAI’s safety policies, including hateful content and illicit advice. Scores range from 0 to 1; higher scores mean the model more reliably declines such requests.

| Category | gpt-oss-120b | gpt-oss-20b |
|---|---|---|
| hate (aggregate) | 0.996 | 0.996 |
| self-harm/intent and self-harm/instructions | 0.995 | 0.984 |
| personal data/semi-restrictive | 0.967 | 0.947 |
| sexual/exploitative | 1.000 | 0.980 |
| sexual/minors | 1.000 | 0.971 |
| illicit/non-violent | 1.000 | 0.983 |
| illicit/violent | 1.000 | 1.000 |
| personal data/restricted | 0.996 | 0.978 |

Acceleration Engine: vLLM

Test Hardware: NVIDIA Hopper (H200)

The model is released with native quantization support: MXFP4 is used for the linear projection weights in the MoE layers. Each of these MoE weight tensors is stored in two parts:

- tensor.blocks stores the actual FP4 values, with two values packed into each uint8.
- tensor.scales stores the block scales. For all MXFP4 tensors, block scaling is applied along the last dimension.

All other tensors are stored in BF16, and BF16 is the recommended activation precision for the model.
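To make the two-part MXFP4 layout concrete, here is a minimal pure-Python sketch that decodes one row of a quantized tensor back to floats. It relies on details this card does not state and which are assumptions here: the OCP Microscaling (MX) conventions of an E2M1 FP4 code table, 32 values per block, E8M0 scales stored as uint8 with an exponent bias of 127, and low-nibble-first packing. The function name `dequantize_row` is hypothetical.

```python
import math

# FP4 (E2M1) code points indexed by the 4-bit code; bit 3 is the sign bit.
FP4_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0,
              -0.0, -0.5, -1.0, -1.5, -2.0, -3.0, -4.0, -6.0]

BLOCK_SIZE = 32  # values per scale block (assumption: standard MX block size)


def dequantize_row(blocks, scales, block_size=BLOCK_SIZE):
    """Decode one row of an MXFP4 tensor (hypothetical helper).

    blocks: iterable of uint8, each packing two FP4 codes
            (low nibble first, an assumption).
    scales: uint8 E8M0 exponents, one per block along the last dimension,
            with an exponent bias of 127 (assumption).
    """
    values = []
    for byte in blocks:
        values.append(FP4_VALUES[byte & 0x0F])  # low nibble
        values.append(FP4_VALUES[byte >> 4])    # high nibble
    if len(values) != block_size * len(scales):
        raise ValueError("row length does not match the number of scales")
    out = []
    for b, scale in enumerate(scales):
        exp = scale - 127  # remove the E8M0 bias
        for v in values[b * block_size:(b + 1) * block_size]:
            out.append(math.ldexp(v, exp))  # v * 2**exp
    return out


if __name__ == "__main__":
    row = dequantize_row([0x21] + [0x00] * 15, scales=[128])
    print(row[:4])
```

Under the same assumptions, each 32-value block costs 32 * 4 + 8 = 136 bits, or about 4.25 bits per weight, which is what makes the MoE weights compact compared with BF16's 16 bits.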

NVIDIA believes Trustworthy AI is a shared responsibility and we have established policies and practices to enable development for a wide array of AI applications. When downloaded or used in accordance with our terms of service, developers should work with their internal model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse.

Please report security vulnerabilities or NVIDIA AI Concerns here.