Reasoning vision language model (VLM) for physical AI and robotics.

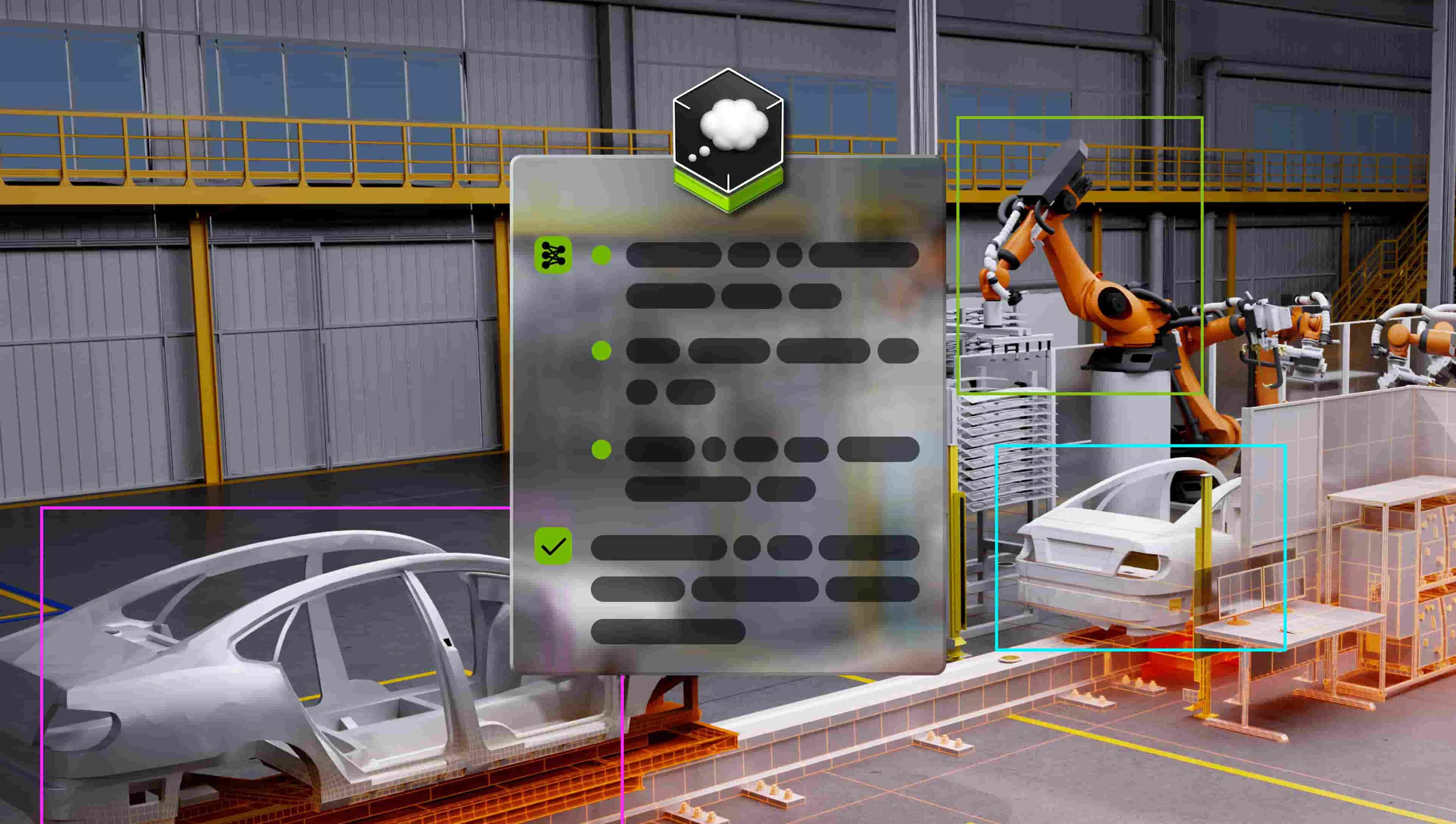

NVIDIA Cosmos Reason, an open, customizable, 7B-parameter reasoning vision language model (VLM) for physical AI and robotics, enables robots and vision AI agents to reason like humans, using prior knowledge, physics understanding, and common sense to understand and act in the real world. The model understands space, time, and fundamental physics, and can serve as a planning model that reasons about what steps an embodied agent might take next.

Cosmos Reason excels at navigating the long tail of diverse physical-world scenarios with spatiotemporal understanding. It is post-trained on physical common sense and embodied reasoning data using supervised fine-tuning and reinforcement learning, and it uses chain-of-thought reasoning to understand world dynamics without human annotations.

Given a video and a text prompt, the model first converts the video into tokens using a vision encoder followed by a projector, which maps the encoder's outputs into the language model's embedding space. These video tokens are combined with the text prompt and fed into the core LLM, which reasons step by step to produce detailed, logical responses.
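The data flow described above can be sketched with toy NumPy arrays. Everything here is an illustrative assumption: the dimensions, the random "vision encoder" stand-in, and the single linear layer used as the projector are not the actual Cosmos-Reason1-7B components. The sketch only shows that projected video tokens and text embeddings end up in one sequence that the LLM consumes.

```python
import numpy as np

# Toy sizes for illustration only; NOT the real model's dimensions.
D_VISION = 32  # hypothetical width of vision-encoder patch features
D_LLM = 64     # hypothetical hidden size of the language model

rng = np.random.default_rng(0)

def vision_encoder(video: np.ndarray, num_patches: int = 8) -> np.ndarray:
    """Stand-in for the ViT: emits `num_patches` random feature vectors per frame."""
    frames = video.shape[0]
    return rng.standard_normal((frames * num_patches, D_VISION))

# Hypothetical projector: one linear layer (W, b) into the LLM embedding space.
W = rng.standard_normal((D_VISION, D_LLM)) * 0.02
b = np.zeros(D_LLM)

def projector(features: np.ndarray) -> np.ndarray:
    return features @ W + b

def build_llm_input(video: np.ndarray, text_embeddings: np.ndarray) -> np.ndarray:
    """Concatenate projected video tokens and text embeddings along the sequence axis."""
    video_tokens = projector(vision_encoder(video))
    return np.concatenate([video_tokens, text_embeddings], axis=0)

video = np.zeros((4, 28, 28, 3))        # 4 dummy RGB frames
text = rng.standard_normal((5, D_LLM))  # 5 dummy text-token embeddings
sequence = build_llm_input(video, text)
print(sequence.shape)  # (37, 64): 4 frames * 8 patches + 5 text tokens
```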

Cosmos Reason can be used for robotics and physical AI applications including:

- Data curation and annotation: Enable developers to automate high-quality curation and annotation of massive, diverse training datasets.
- Robot planning and reasoning: Act as the brain for deliberate, methodical decision-making in a robot vision language action (VLA) model. Robots such as humanoids and autonomous vehicles can interpret their environments and, given complex commands, break them down into tasks and execute them using common sense, even in unfamiliar environments.
- Video analytics AI agents: Extract valuable insights and perform root-cause analysis on massive volumes of video data. These agents can analyze and understand recorded or live video streams across city and industrial operations.

The model is ready for commercial use.

Model Developer: NVIDIA

GOVERNING TERMS: This trial service is governed by the NVIDIA API Trial Terms of Service. Use of the model is governed by the NVIDIA Open Model License Agreement. Additional Information: Apache License 2.0.

Models are commercially usable.

You are free to create and distribute Derivative Models. NVIDIA does not claim ownership to any outputs generated using the Models or Derivative Models.

Important Note: If You bypass, disable, reduce the efficacy of, or circumvent any technical limitation, safety guardrail or associated safety guardrail hyperparameter, encryption, security, digital rights management, or authentication mechanism (collectively “Guardrail”) contained in the Model without a substantially similar Guardrail appropriate for your use case, your rights under the NVIDIA Open Model License Agreement will automatically terminate.

Deployment Geography: Global

Robotics engineers and AI researchers developing embodied agents, such as autonomous vehicles and robotic systems, would use this physical AI model to equip their machines with spatiotemporal reasoning and a fundamental understanding of physics for navigation, manipulation, and decision-making tasks.

Build.NVIDIA.com: 08/11/2025

Hugging Face: 08/01/2025

Architecture Type: A multimodal LLM consisting of a Vision Transformer (ViT) as the vision encoder and a dense Transformer as the LLM.

Network Architecture: Qwen2.5-VL-7B-Instruct.

Cosmos-Reason1-7B is post-trained from Qwen2.5-VL-7B-Instruct and follows the same model architecture.

Number of model parameters:

Cosmos-Reason1-7B:

Computational Load:

Input Types: Text, Video

Input Formats: Text

Input Parameters: Text: One Dimensional (1D), Video

Other Input Properties:

Add "Answer the question in the following format: <think>\nyour reasoning\n</think>\n\n<answer>\nyour answer\n</answer>." to the system prompt to encourage long chain-of-thought reasoning responses.

Input Context Length (ISL): 128K
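When the system prompt requests the `<think>`/`<answer>` format, downstream code usually needs to separate the reasoning from the final answer. The card does not prescribe a parser; the following is a minimal sketch that assumes the model follows the requested tag format and returns `None` for any part whose closing tag is missing (for example, when the output was cut off).

```python
import re
from typing import Optional, Tuple

# Matches the requested format: <think>\n...\n</think>\n\n<answer>\n...\n</answer>
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
_ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)

def split_reasoning(response: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (reasoning, answer); either is None if its tag pair is incomplete."""
    think = _THINK_RE.search(response)
    answer = _ANSWER_RE.search(response)
    return (
        think.group(1).strip() if think else None,
        answer.group(1).strip() if answer else None,
    )

reasoning, answer = split_reasoning(
    "<think>\nThe crosswalk is clear.\n</think>\n\n<answer>\nYes\n</answer>"
)
print(answer)  # Yes
```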

Output Type: Text

Output Format: String

Output Parameters: One Dimensional (1D)

Other Output Properties:

We recommend setting the maximum number of output tokens to 4096 or more to avoid truncating long chain-of-thought responses.
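A simple way to detect truncation is sketched below. It assumes each completion exposes `.text` and `.finish_reason`, as vLLM's `CompletionOutput` does (`finish_reason == "length"` means generation stopped at `max_tokens`), and falls back to checking for the closing `</answer>` tag. The `looks_truncated` helper is hypothetical; `SimpleNamespace` objects stand in for real outputs so the sketch runs without a GPU.

```python
from types import SimpleNamespace

def looks_truncated(completion) -> bool:
    """Heuristic: True if generation hit max_tokens or the answer tag never closed."""
    if getattr(completion, "finish_reason", None) == "length":
        return True
    # Fallback: the requested output format should contain a closing </answer> tag.
    return "</answer>" not in completion.text

# Stand-ins for vLLM completion outputs.
ok = SimpleNamespace(text="<think>...</think>\n\n<answer>\nNo\n</answer>", finish_reason="stop")
cut = SimpleNamespace(text="<think>\nThe car on the left", finish_reason="length")
print(looks_truncated(ok), looks_truncated(cut))  # False True
```

If a response is flagged, re-running with a larger `max_tokens` is the straightforward remedy.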

Our AI model recognizes timestamps added at the bottom of each frame for accurate temporal localization.
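The card does not specify the timestamp overlay's format, font, or exact placement beyond "the bottom of each frame". The sketch below therefore only covers the bookkeeping: choosing which source frames to keep when resampling to 4 fps and computing a label for each one. The `MM:SS.mmm` format and both helper names are assumptions, and drawing the label onto the frame (for example with OpenCV or Pillow) is left out.

```python
from typing import List

def sample_frame_indices(num_frames: int, source_fps: float, target_fps: float = 4.0) -> List[int]:
    """Indices of source frames to keep when resampling a clip to target_fps."""
    duration = num_frames / source_fps
    count = int(duration * target_fps)
    step = source_fps / target_fps
    return [min(int(i * step), num_frames - 1) for i in range(count)]

def timestamp_label(frame_index: int, source_fps: float) -> str:
    """Hypothetical 'MM:SS.mmm' label for a frame's presentation time."""
    total_ms = int(round(frame_index / source_fps * 1000))
    minutes, rem_ms = divmod(total_ms, 60_000)
    seconds, ms = divmod(rem_ms, 1000)
    return f"{minutes:02d}:{seconds:02d}.{ms:03d}"

indices = sample_frame_indices(num_frames=72, source_fps=24.0)  # a 3 s clip at 24 fps
labels = [timestamp_label(i, 24.0) for i in indices]
print(labels[:3])  # ['00:00.000', '00:00.250', '00:00.500']
```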

Our AI models are designed and/or optimized to run on NVIDIA GPU-accelerated systems. By leveraging NVIDIA's hardware (e.g., GPU cores) and software frameworks (e.g., CUDA libraries), the model achieves faster training and inference times compared to CPU-only solutions.

Runtime Engines:

Supported Hardware:

Please see our technical paper for detailed evaluations on physical common sense and embodied reasoning. Part of the evaluation data is released as Cosmos-Reason1-Benchmark. The embodied reasoning datasets and benchmarks focus on the following areas: robotics (RoboVQA, BridgeDataV2, Agibot, RoboFail), egocentric human demonstration (HoloAssist), and autonomous vehicle (AV) driving video data. The AV dataset is collected and annotated by NVIDIA.

All datasets go through the data annotation process described in the technical paper to prepare training and evaluation data and annotations.

We further enhance the model's capabilities with augmented training data. PLM-Video-Human and Nexar are used to enable dense temporal captioning. Describe Anything is added to enhance set-of-mark (SoM) prompting. We also enrich the data for intelligent transportation systems (ITS) and warehouse applications.

Lastly, the Visual Critics dataset contains AI-generated videos from Cosmos-Predict2 and Wan2.1, with human annotations describing the physical correctness of each video.

Data Collection Method by dataset:

Labeling Method by dataset:


Evaluation Benchmark Results:

We report the model accuracy on the embodied reasoning benchmark introduced in Cosmos-Reason1. The results differ from those presented in Table 9 of the Cosmos-Reason1 paper due to additional training aimed at supporting a broader range of physical AI tasks beyond the benchmark.

| Dataset | RoboVQA | AV | BridgeDataV2 | Agibot | HoloAssist | RoboFail | Average |
|---|---|---|---|---|---|---|---|
| Accuracy | 87.3 | 70.8 | 63.7 | 48.9 | 62.7 | 57.2 | 65.1 |
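The Average column matches the unweighted (macro) mean of the six accuracies. For comparison, the sketch below also computes a sample-weighted mean, assuming the per-benchmark sample counts from the Benchmark Data row of the data table that follows; that weighting is an illustration, not how the card reports its average.

```python
# Accuracies copied from the table above.
accuracy = {"RoboVQA": 87.3, "AV": 70.8, "BridgeDataV2": 63.7,
            "Agibot": 48.9, "HoloAssist": 62.7, "RoboFail": 57.2}
# Assumed benchmark sizes, taken from the "Benchmark Data" row of the data table.
num_samples = {"RoboVQA": 110, "AV": 100, "BridgeDataV2": 100,
               "Agibot": 100, "HoloAssist": 100, "RoboFail": 100}

macro_avg = sum(accuracy.values()) / len(accuracy)
weighted_avg = sum(accuracy[k] * num_samples[k] for k in accuracy) / sum(num_samples.values())
print(f"unweighted: {macro_avg:.1f}, sample-weighted: {weighted_avg:.1f}")
# unweighted: 65.1, sample-weighted: 65.5
```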

Modality: Video (mp4) and Text

We release the embodied reasoning data and benchmarks. Each data sample is a pair of video and text. The text annotations include the understanding and reasoning annotations described in the Cosmos-Reason1 paper. Each video may have multiple text annotations. The number of video-text pairs per dataset is listed in the table below. The AV data is currently unavailable and will be uploaded soon!

| Dataset Type | RoboVQA | AV | BridgeDataV2 | Agibot | HoloAssist | RoboFail | Total Storage Size |
|---|---|---|---|---|---|---|---|
| SFT Data | 1.14M | 24.7k | 258k | 38.9k | 273k | N/A | 300.6GB |
| RL Data | 252 | 200 | 240 | 200 | 200 | N/A | 2.6GB |
| Benchmark Data | 110 | 100 | 100 | 100 | 100 | 100 | 1.5GB |

We release text annotations for all embodied reasoning datasets and videos for the RoboVQA and AV datasets. For the other datasets, users may download the source videos from the original data sources and match them to the annotations by video name. The held-out RoboFail benchmark is released to measure generalization capability.
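Matching annotations to separately downloaded source videos by name can be done with a small local index. This is a sketch under assumptions: the released annotation schema is not described here, so the `"video"` key holding the video name, the `.mp4` suffix, and both helper functions are hypothetical. Videos with the same file stem in different subfolders would collide in this simple index.

```python
import tempfile
from pathlib import Path
from typing import Dict, List

def index_videos(root: Path, suffix: str = ".mp4") -> Dict[str, Path]:
    """Map file stem -> path for every video found recursively under `root`."""
    return {p.stem: p for p in root.rglob(f"*{suffix}")}

def attach_videos(annotations: List[dict], root: Path) -> List[dict]:
    """Return annotations whose (hypothetical) 'video' name exists locally, with a 'video_path' added."""
    index = index_videos(root)
    matched = []
    for ann in annotations:
        stem = Path(ann["video"]).stem
        if stem in index:
            matched.append({**ann, "video_path": str(index[stem])})
    return matched

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "episode_001.mp4").touch()  # pretend this source video was downloaded
    anns = [{"video": "episode_001.mp4"}, {"video": "missing.mp4"}]
    result = attach_videos(anns, root)
    print(len(result))  # 1
```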

| Dataset Type | PLM-Video-Human | Nexar | Describe Anything | ITS / Warehouse | Visual Critics | Total Storage Size |
|---|---|---|---|---|---|---|
| SFT Data | 39k | 240k | 178k | 24k | 24k | 2.6TB |

Test Hardware: H100

Note: We suggest using fps=4 for the input video and max_tokens=4096 to avoid truncated responses.
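The sampling rate directly controls how much of the 128K input context a video consumes. The rough estimate below assumes the Qwen2.5-VL defaults (14-pixel patches, 2 frames per temporal patch, and 2x2 spatial merging, so each 28x28 region of a frame pair becomes one LLM token) and frame sizes that are already multiples of 28; the exact resizing performed by `qwen_vl_utils` is not reproduced, and `estimate_video_tokens` is a hypothetical helper.

```python
import math

def estimate_video_tokens(duration_s: float, fps: float, height: int, width: int) -> int:
    """Rough LLM token count for one video, assuming Qwen2.5-VL-style patching."""
    frames = math.ceil(duration_s * fps)
    temporal_groups = math.ceil(frames / 2)            # 2 frames per temporal patch
    tokens_per_group = (height // 28) * (width // 28)  # 14-px patches merged 2x2
    return temporal_groups * tokens_per_group

print(estimate_video_tokens(10, 4, 448, 448))  # 5120
print(estimate_video_tokens(10, 8, 448, 448))  # 10240
```

Doubling the frame rate doubles the video token count, so a higher fps than the recommended 4 leaves less room for the prompt and shortens the clip length that fits in context.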

```python
from transformers import AutoProcessor
from vllm import LLM, SamplingParams
from qwen_vl_utils import process_vision_info

# You can also replace MODEL_PATH with a safetensors folder path mentioned above
MODEL_PATH = "nvidia/Cosmos-Reason1-7B"

llm = LLM(
    model=MODEL_PATH,
    limit_mm_per_prompt={"image": 10, "video": 10},
)

sampling_params = SamplingParams(
    temperature=0.6,
    top_p=0.95,
    repetition_penalty=1.05,
    max_tokens=4096,
)

video_messages = [
    {"role": "system", "content": "You are a helpful assistant. Answer the question in the following format: <think>\nyour reasoning\n</think>\n\n<answer>\nyour answer\n</answer>."},
    {"role": "user", "content": [
        {"type": "text", "text": (
            "Is it safe to turn right?"
        )},
        {
            "type": "video",
            "video": "file:///path/to/your/video.mp4",
            "fps": 4,
        },
    ]},
]

# Here we use video messages as a demonstration
messages = video_messages

processor = AutoProcessor.from_pretrained(MODEL_PATH)
prompt = processor.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)

# Extract image/video inputs from the messages; video_kwargs carries the sampling FPS
image_inputs, video_inputs, video_kwargs = process_vision_info(messages, return_video_kwargs=True)

mm_data = {}
if image_inputs is not None:
    mm_data["image"] = image_inputs
if video_inputs is not None:
    mm_data["video"] = video_inputs

llm_inputs = {
    "prompt": prompt,
    "multi_modal_data": mm_data,
    # FPS will be returned in video_kwargs
    "mm_processor_kwargs": video_kwargs,
}

outputs = llm.generate([llm_inputs], sampling_params=sampling_params)
generated_text = outputs[0].outputs[0].text
print(generated_text)
```

Use FPS=4 for the input video to match the training setup.

Add "Answer the question in the following format: <think>\nyour reasoning\n</think>\n\n<answer>\nyour answer\n</answer>." to the system prompt to encourage long chain-of-thought reasoning responses.

NVIDIA believes Trustworthy AI is a shared responsibility and we have established policies and practices to enable development for a wide array of AI applications. When downloaded or used in accordance with our terms of service, developers should work with their internal model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse.

Please report security vulnerabilities or NVIDIA AI Concerns here