An AI-powered, multi-agent system designed to optimize warehouse operations through intelligent automation, real-time monitoring, and natural language interaction.

A GenAI system that enhances and localizes product catalogs with rich text content and imagery.

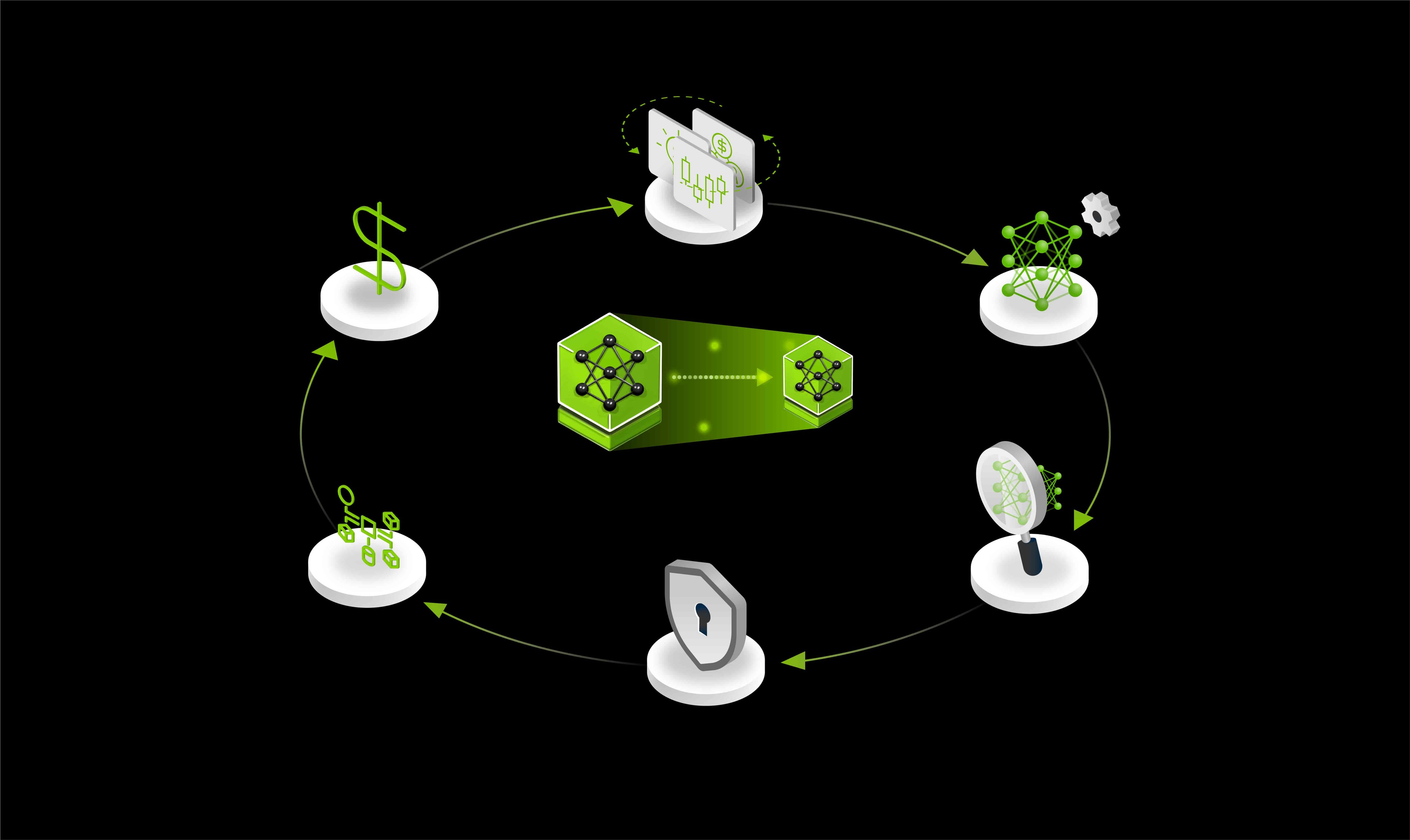

Distill and deploy domain-specific AI models from unstructured financial data to generate market signals efficiently—scaling your workflow with the NVIDIA Data Flywheel Blueprint for high-performance, cost-efficient experimentation.

Build advanced AI agents for providers and patients using this developer example powered by NeMo Microservices, NVIDIA Nemotron, Riva ASR and TTS, and NVIDIA LLM NIM.

Securely extract, embed, and index multimodal data with encryption in-use for fast, accurate semantic search.

Elevate Shopping Experiences Online and In Stores.

Sensor-captured radio enables real-time awareness and AI-driven analytics for actionable, searchable insights.

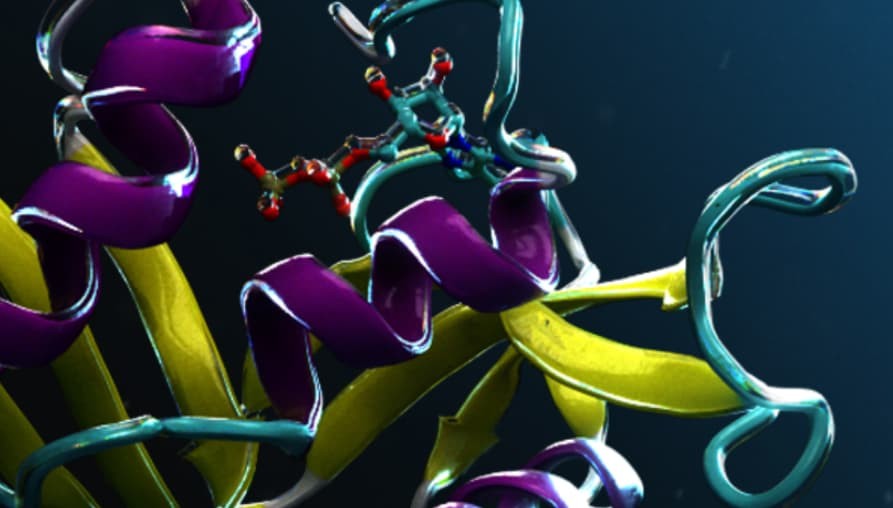

This blueprint shows how generative AI and accelerated NIM microservices can design optimized small molecules smarter and faster.

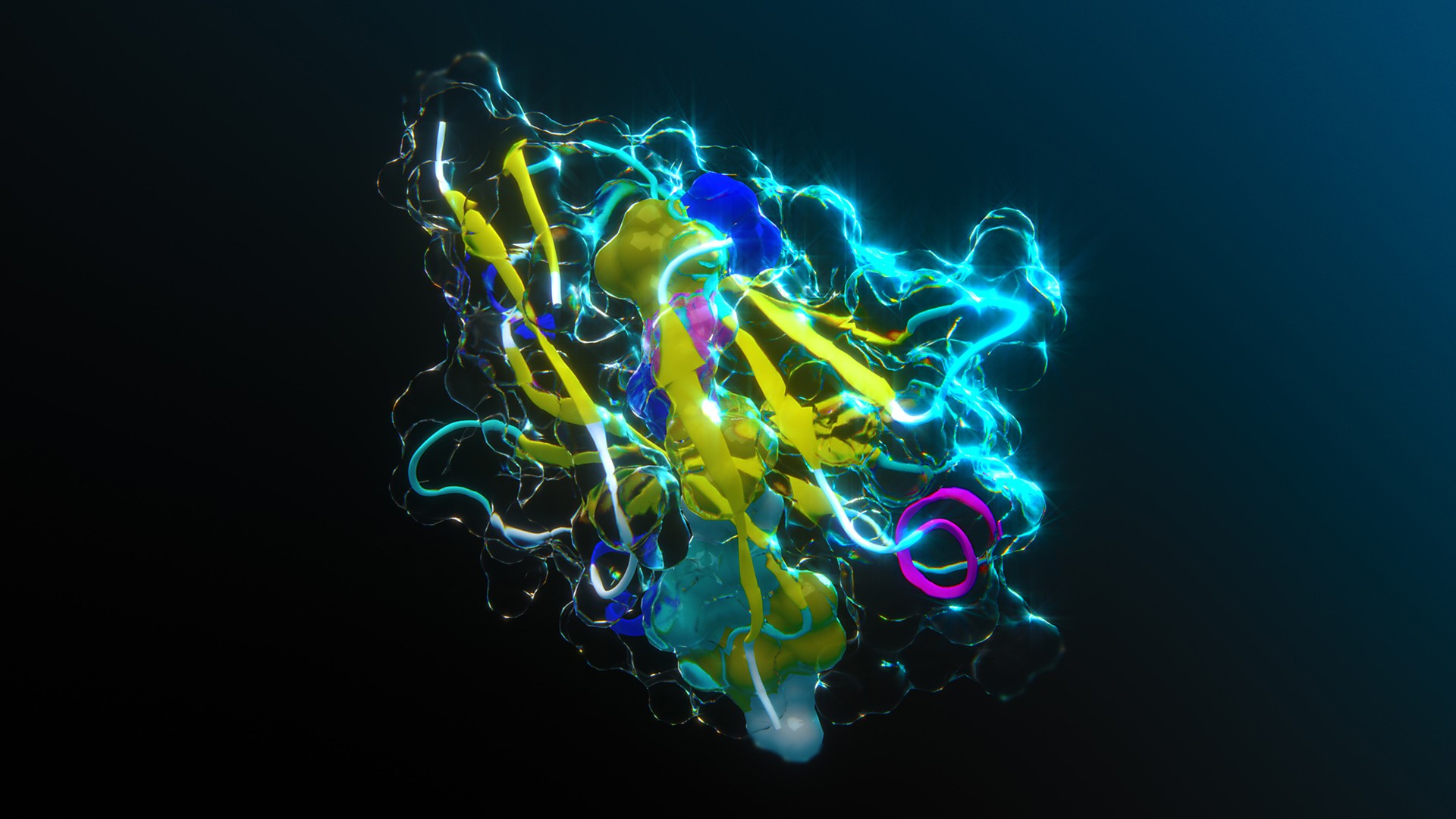

This blueprint shows how generative AI and accelerated NIM microservices can design protein binders smarter and faster.

This workflow shows how generative AI can generate DNA sequences that can be translated into proteins for bioengineering.

Build a custom enterprise research assistant powered by state-of-the-art models that process and synthesize multimodal data, enabling reasoning, planning, and refinement to generate comprehensive reports.

Use the multi-LLM compatible NIM container to deploy a broad range of LLMs from Hugging Face.

Power fast, accurate semantic search across multimodal enterprise data with NVIDIA’s RAG Blueprint—built on NeMo Retriever and Nemotron models—to connect your agents to trusted, authoritative sources of knowledge.

Build a data flywheel with NVIDIA NeMo microservices that continuously optimizes AI agents for latency and cost while maintaining accuracy targets.

Automate and optimize the configuration of radio access network (RAN) parameters using agentic AI and a large language model (LLM)-driven framework.

Generate detailed, structured reports on any topic using LangGraph and Llama 3.3 70B NIM.

Automate voice AI agents with NVIDIA NIM microservices and Pipecat.

Document your GitHub repositories with AI agents using CrewAI and Llama 3.3 70B NIM.