Deploy Models Now with NVIDIA NIM

Optimized inference for the world’s leading models

Free serverless APIs for development

Self-Host on your GPU infrastructure

Continuous vulnerability fixes

Developer examples designed for quick-start AI development in financial services, including artifacts such as Docker containers and Jupyter notebooks that deploy quickly with tools like Docker Compose and Brev Launchable.

Enable fast, scalable, and real-time portfolio optimization for financial institutions.

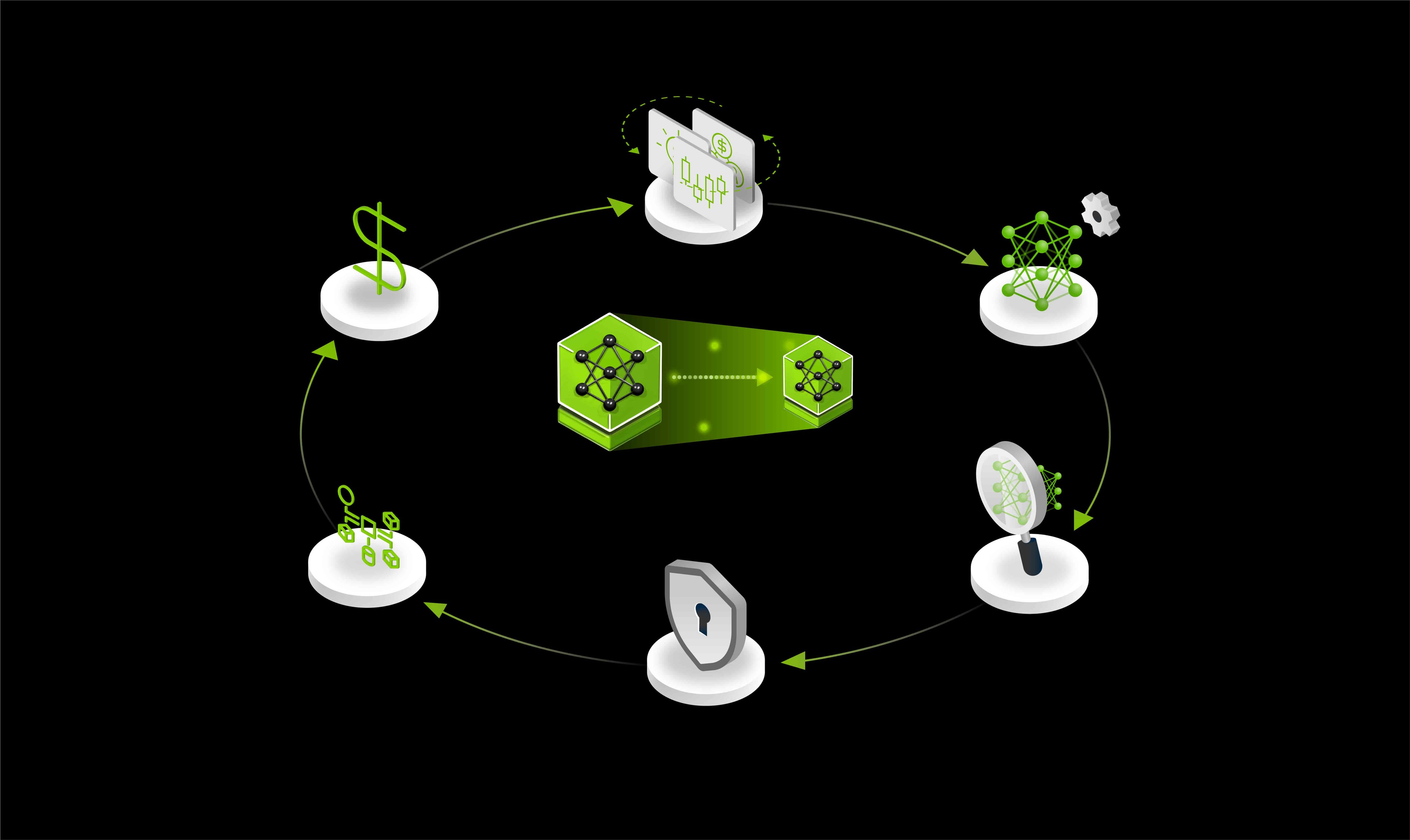

Distill and deploy domain-specific AI models from unstructured financial data to generate market signals efficiently. Scale your workflow with the NVIDIA Data Flywheel Blueprint for high-performance, cost-efficient experimentation.

Detect and prevent sophisticated fraudulent activities for financial services with high accuracy.