An AI-powered, multi-agent system designed to optimize warehouse operations through intelligent automation, real-time monitoring, and natural language interaction.

A GenAI system that enhances and localizes product catalogs with rich text content and imagery.

State-of-the-art 685B reasoning LLM with sparse attention, long context, and integrated agentic tools.

State-of-the-art open code model with deep reasoning, 256K context, and unmatched efficiency.

A state-of-the-art general-purpose MoE VLM ideal for chat, agentic, and instruction-based use cases.

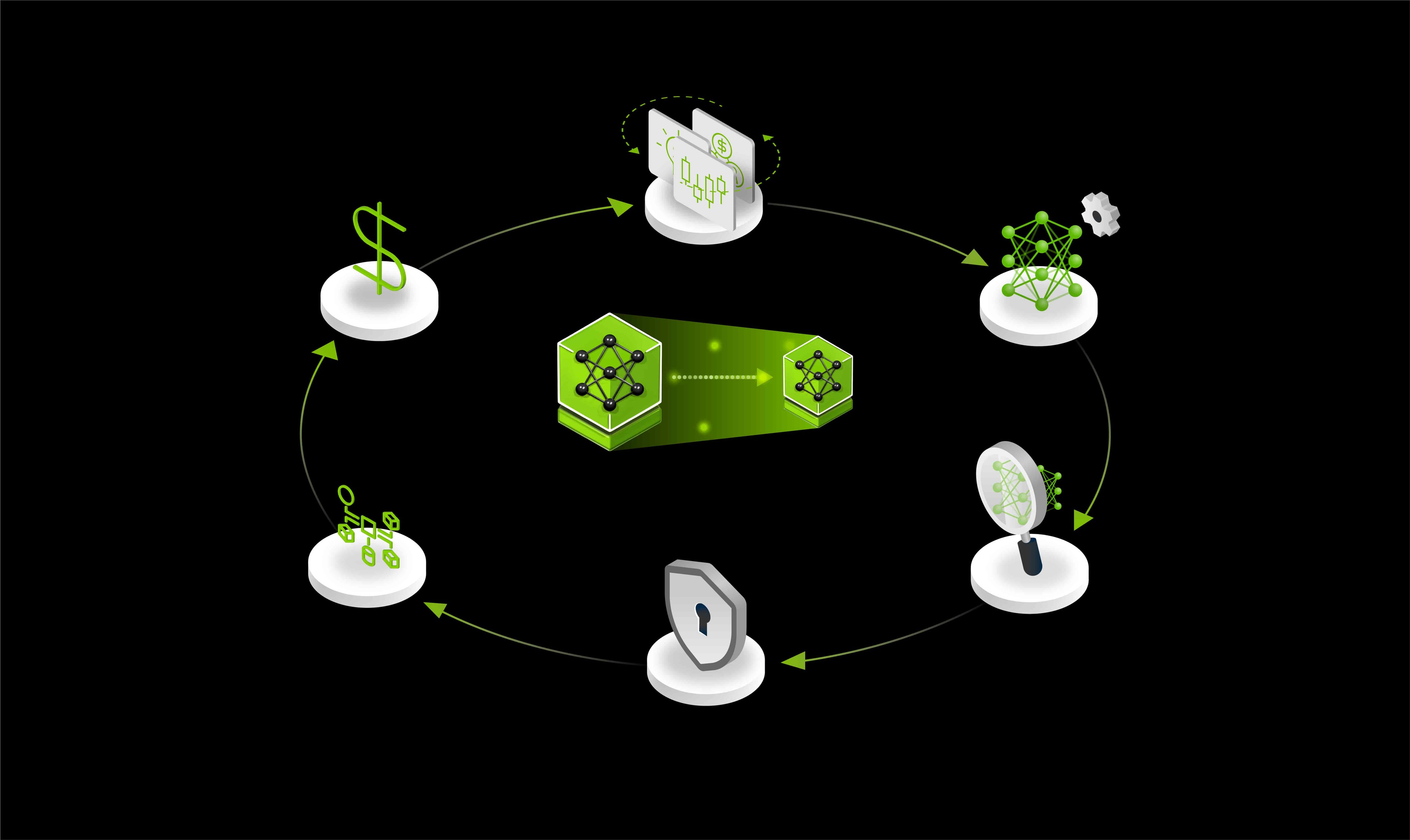

Distill and deploy domain-specific AI models from unstructured financial data to generate market signals efficiently, scaling your workflow with the NVIDIA Data Flywheel Blueprint for high-performance, cost-efficient experimentation.

Enable fast, scalable, and real-time portfolio optimization for financial institutions.

Accelerate post-training of end-to-end autonomous vehicle stacks with vector search and retrieval for large video datasets.

Open Mixture of Experts LLM (230B, 10B active) for reasoning, coding, and tool-use/agent workflows

Build advanced AI agents for providers and patients using this developer example powered by NeMo Microservices, NVIDIA Nemotron, Riva ASR and TTS, and NVIDIA LLM NIM

H2O.ai Flood Intelligence provides real-time, scalable intelligence for AI-powered disaster management.

Securely extract, embed, and index multimodal data with encryption in-use for fast, accurate semantic search.

DeepSeek-V3.1: hybrid inference LLM with Think/Non-Think modes, stronger agents, 128K context, strict function calling.

Qwen3-Next Instruct blends hybrid attention, sparse MoE, and stability boosts for ultra-long context AI.

Follow-on version of Kimi-K2-Instruct with longer context window and enhanced reasoning capabilities

ByteDance open-source LLM with long-context, reasoning, and agentic intelligence.

Transform your scene idea into ready-to-use 3D assets using Llama 3.1 8B, NV SANA, and Microsoft TRELLIS

Excels in agentic coding and browser use and supports 256K context, delivering top results.

Elevate Shopping Experiences Online and In Stores.

High‑efficiency LLM with hybrid Transformer‑Mamba design, excelling in reasoning and agentic tasks.

Sensor-captured radio enables real-time awareness and AI-driven analytics for actionable, searchable insights.

State-of-the-art open mixture-of-experts model with strong reasoning, coding, and agentic capabilities

Built for agentic workflows, this model excels in coding, instruction following, and function calling

Use the multi-LLM compatible NIM container to deploy a broad range of LLMs from Hugging Face.

Build advanced AI agents within the biomedical domain using the AI-Q Blueprint and the BioNeMo Virtual Screening Blueprint

Build a data flywheel with NVIDIA NeMo microservices that continuously optimizes AI agents for latency and cost while maintaining accuracy targets.

Streamline evaluation, monitoring, and optimization of the AI data flywheel with Weights & Biases.

Orchestrate AI agents for a data flywheel with MLRun and NVIDIA NeMo microservices.

Improve safety, security, and privacy of AI systems at the build, deploy, and run stages.

Automate and optimize the configuration of radio access network (RAN) parameters using agentic AI and a large language model (LLM)-driven framework.

Detect and prevent sophisticated fraudulent activities for financial services with high accuracy.

State-of-the-art open model for reasoning, code, math, and tool calling, suitable for edge agents.

Design, test, and optimize a new generation of intelligence manufacturing data centers using digital twins.

Create high-quality images using Flux.1 in ComfyUI, guided by 3D.

Build a custom enterprise research assistant powered by state-of-the-art models that process and synthesize multimodal data, enabling reasoning, planning, and refinement to generate comprehensive reports.

Develop an AI-powered weather analysis and forecasting application that visualizes multi-layered geospatial data.

Investigate, understand, and interpret single-cell data in minutes, not days, by leveraging RAPIDS-singlecell, powered by NVIDIA RAPIDS.

Save time by running essential genomics workflows with Parabricks.

Generate exponentially large amounts of synthetic motion trajectories for robot manipulation from just a few human demonstrations.

Simulate, test, and optimize physical AI and robotic fleets at scale in industrial digital twins before real-world deployment.

Model with leading reasoning and agentic AI accuracy for PC and edge.

Natural and expressive voices in multiple languages for voice agents and brand ambassadors.

Route LLM requests to the best model for the task at hand.

This workflow shows how generative AI can generate DNA sequences that can be translated into proteins for bioengineering.

Latency-optimized language model excelling in code, math, general knowledge, and instruction-following.

Power fast, accurate semantic search across multimodal enterprise data with NVIDIA’s RAG Blueprint, built on NeMo Retriever and Nemotron models, to connect your agents to trusted, authoritative sources of knowledge.

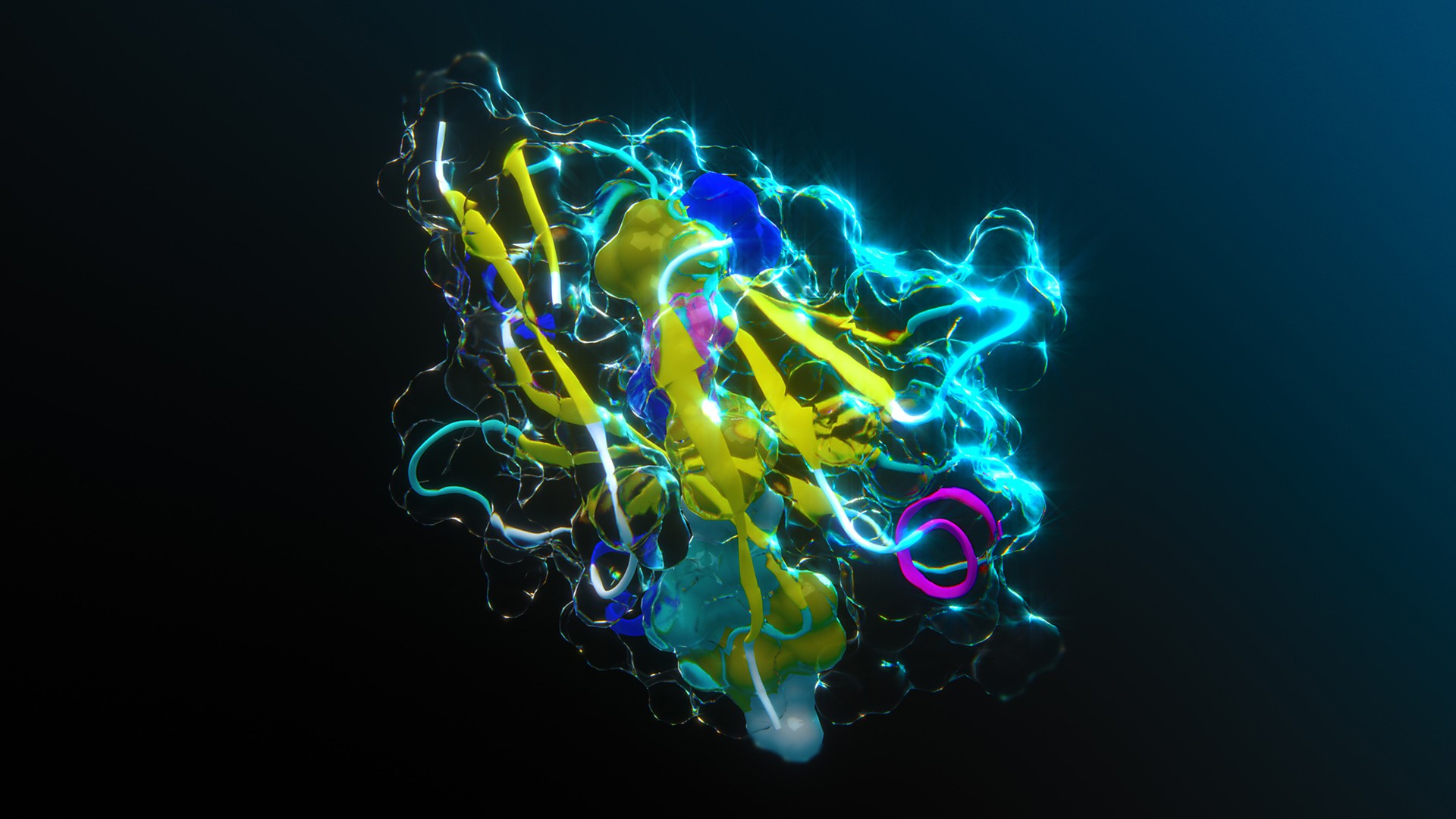

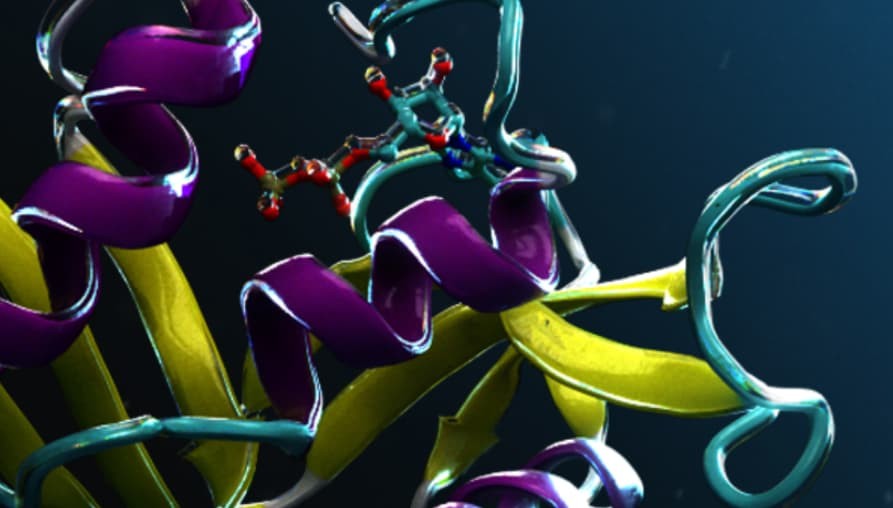

This blueprint shows how generative AI and accelerated NIM microservices can design protein binders smarter and faster.

Transform PDFs into AI podcasts for engaging on-the-go audio content.

Trace and evaluate AI agents with Weights & Biases.

Automate voice AI agents with NVIDIA NIM microservices and Pipecat.

Generate detailed, structured reports on any topic using LangGraph and Llama 3.3 70B NIM.

Document your GitHub repositories with AI agents using CrewAI and Llama 3.3 70B NIM.

This NVIDIA Omniverse™ Blueprint demonstrates how commercial software vendors can create interactive digital twins.

Ingest massive volumes of live or archived videos and extract insights for summarization and interactive Q&A

Enhance and modify high-quality compositions using real-time rendering and generative AI output without affecting a hero product asset.

Create intelligent virtual assistants for customer service across every industry

Rapidly identify and mitigate container security vulnerabilities with generative AI.

This blueprint shows how generative AI and accelerated NIM microservices can design optimized small molecules smarter and faster.