An AI-powered, multi-agent system designed to optimize warehouse operations through intelligent automation, real-time monitoring, and natural language interaction.

Vision language model that excels at understanding the physical world using structured reasoning on videos or images.

State-of-the-art 685B reasoning LLM with sparse attention, long context, and integrated agentic tools.

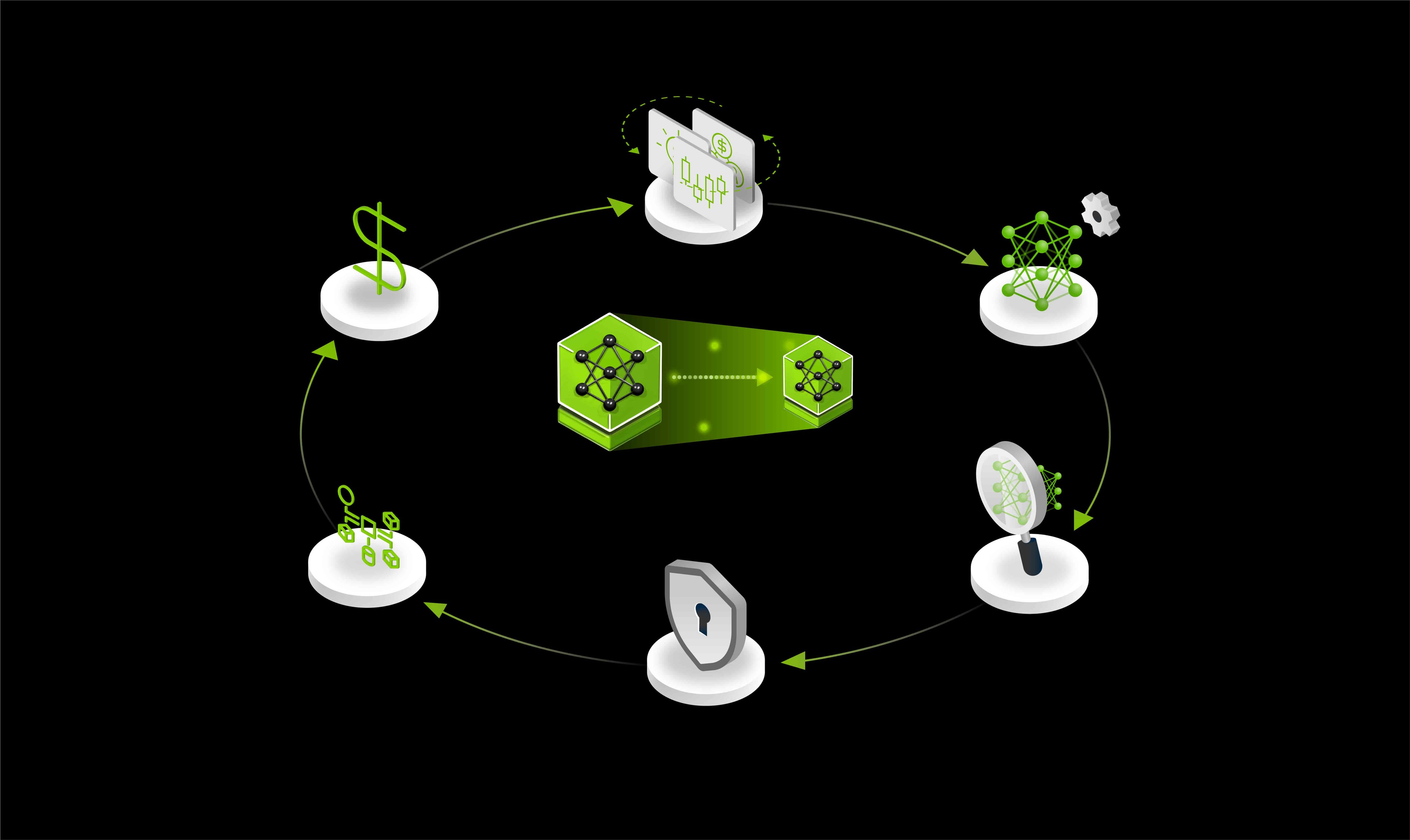

Distill and deploy domain-specific AI models from unstructured financial data to generate market signals efficiently—scaling your workflow with the NVIDIA Data Flywheel Blueprint for high-performance, cost-efficient experimentation.

StreamPETR offers efficient 3D object detection for autonomous driving by propagating sparse object queries temporally.

Accelerate post-training of end-to-end autonomous vehicle stacks with vector search and retrieval for large video datasets.

Build advanced AI agents for providers and patients using this developer example powered by NeMo Microservices, NVIDIA Nemotron, Riva ASR and TTS, and NVIDIA LLM NIM

H2O.ai Flood Intelligence provides real-time, scalable intelligence for AI-powered disaster management.

DeepSeek-V3.1: hybrid inference LLM with Think/Non-Think modes, stronger agents, 128K context, strict function calling.

Japanese-specialized large language model for enterprises to read and understand complex business documents.

Qwen3-Next Instruct blends hybrid attention, sparse MoE, and stability boosts for ultra-long context AI.

State-of-the-art model for Polish language processing tasks such as text generation, Q&A, and chatbots.

80B-parameter AI model with hybrid reasoning, an MoE architecture, and support for 119 languages.

Transform your scene idea into ready-to-use 3D assets using Llama 3.1 8B, NV SANA, and Microsoft TRELLIS

DeepSeek V3.1 Instruct is a hybrid AI model with fast reasoning, 128K context, and strong tool use.

Reasoning vision language model (VLM) for physical AI and robotics.

Smaller Mixture of Experts (MoE) text-only LLM for efficient AI reasoning and math

Sensor-captured radio enables real-time awareness and AI-driven analytics for actionable, searchable insights.

Multilingual 7B LLM, instruction-tuned on all 24 EU languages for stable, culturally aligned output.

Generates high-quality numerical embeddings from text inputs.

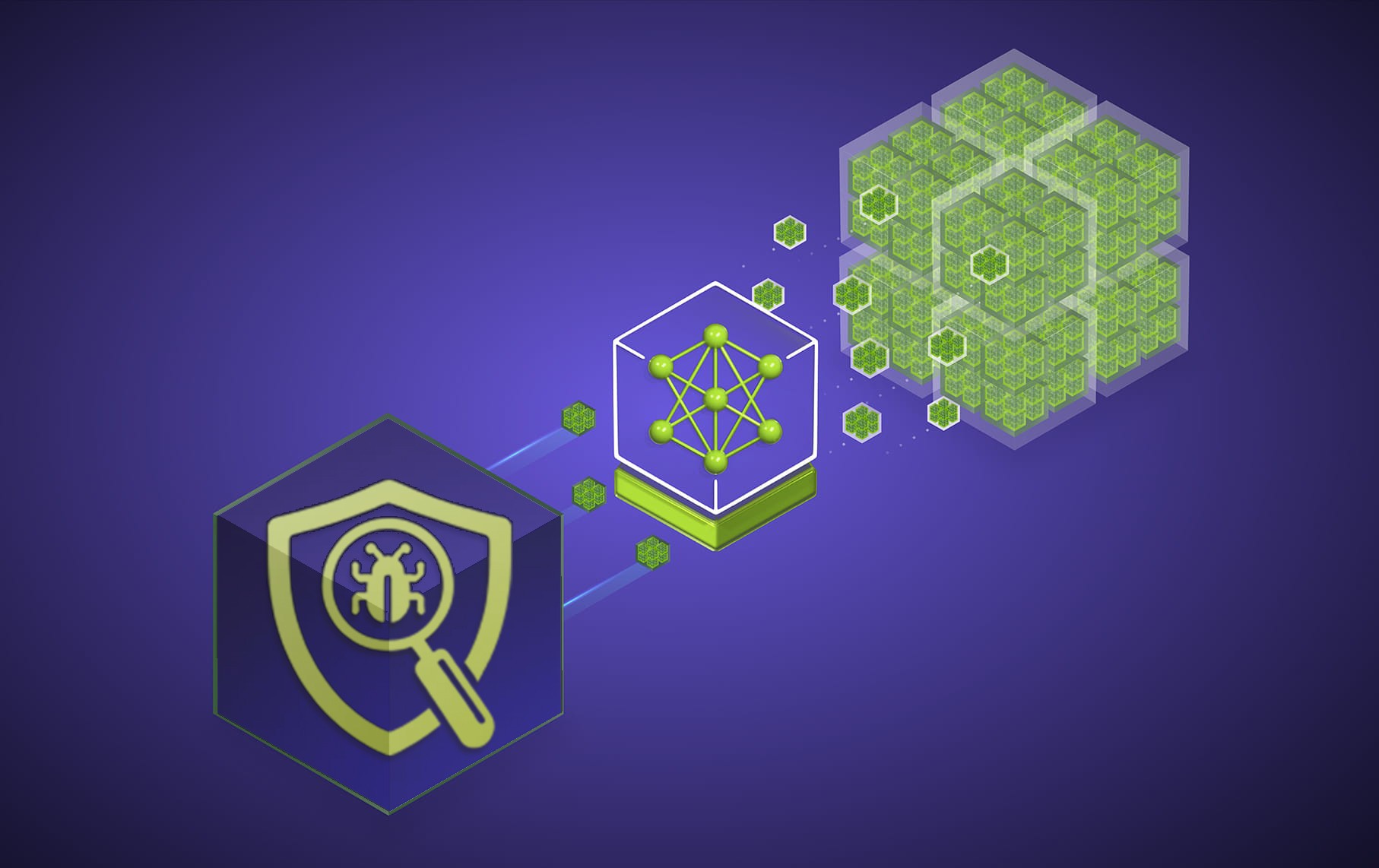

Rapidly identify and mitigate container security vulnerabilities with generative AI.

Ingest massive volumes of live or archived videos and extract insights for summarization and interactive Q&A

Fine-tuned reranking model for multilingual, cross-lingual text question-answering retrieval, with long context support.

English text embedding model for question-answering retrieval.

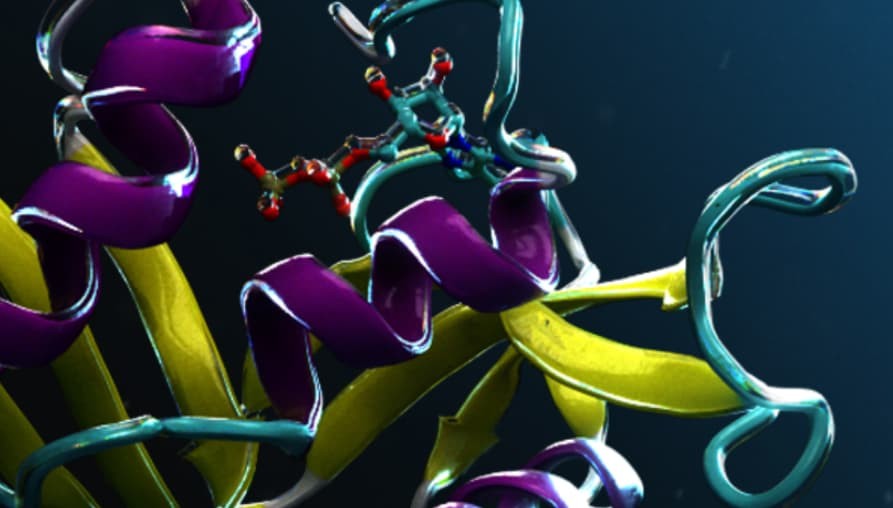

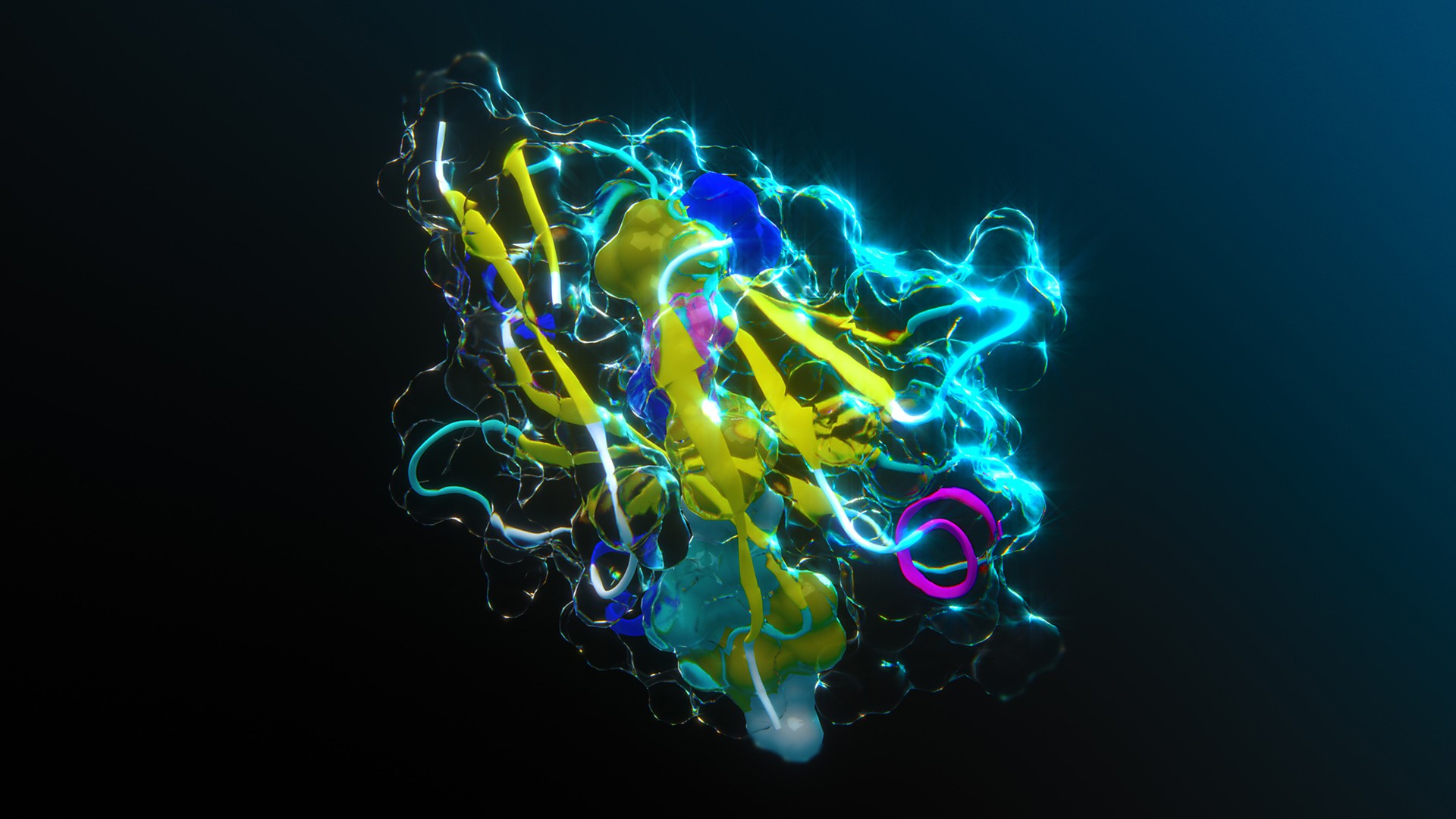

This blueprint shows how generative AI and accelerated NIM microservices can design optimized small molecules smarter and faster.

Advanced MoE model excelling at reasoning, multilingual tasks, and instruction following.

Multilingual and cross-lingual text question-answering retrieval with long context support and optimized data storage efficiency.

This blueprint shows how generative AI and accelerated NIM microservices can design protein binders smarter and faster.

This workflow shows how generative AI can generate DNA sequences that can be translated into proteins for bioengineering.

End-to-end autonomous driving stack integrating perception, prediction, and planning with sparse scene representations for efficiency and safety.

An MOE LLM that follows instructions, completes requests, and generates creative text.

Improve safety, security, and privacy of AI systems at build, deploy and run stages.

State-of-the-art, high-efficiency LLM excelling in reasoning, math, and coding.

Build a custom enterprise research assistant powered by state-of-the-art models that process and synthesize multimodal data, enabling reasoning, planning, and refinement to generate comprehensive reports.

An edge computing AI model which accepts text, audio and image input, ideal for resource-constrained environments

Create intelligent virtual assistants for customer service across every industry

Power fast, accurate semantic search across multimodal enterprise data with NVIDIA’s RAG Blueprint—built on NeMo Retriever and Nemotron models—to connect your agents to trusted, authoritative sources of knowledge.

Model for object detection, fine-tuned to detect charts, tables, and titles in documents.

Distilled version of Llama 3.1 8B using reasoning data generated by DeepSeek R1 for enhanced performance.

Design, test, and optimize a new generation of intelligence manufacturing data centers using digital twins.

Orchestrate AI agents for a data flywheel with MLRun and NVIDIA NeMo microservices.

Build a data flywheel, with NVIDIA NeMo microservices, that continuously optimizes AI agents for latency and cost — while maintaining accuracy targets.

Multimodal model that classifies the safety of input prompts as well as output responses.

Generates physics-aware video world states for physical AI development using text prompts and multiple spatial control inputs derived from real-world data or simulation.

Model with leading accuracy on reasoning and agentic AI tasks, for PC and edge.

Multimodal question-answer retrieval representing user queries as text and documents as images.

SOTA LLM pre-trained for instruction following and proficiency in the Indonesian language and its dialects.

Automate and optimize the configuration of radio access network (RAN) parameters using agentic AI and a large language model (LLM)-driven framework.

State-of-the-art, multilingual model tailored to all 24 official European Union languages.

Create high-quality images using FLUX.1 in ComfyUI, guided by 3D.

FLUX.1-schnell is a distilled image generation model that produces high-quality images at fast speeds.

Updated version of DeepSeek-R1 with enhanced reasoning, coding, math, and reduced hallucination.

Build advanced AI agents within the biomedical domain using the AI-Q Blueprint and the BioNeMo Virtual Screening Blueprint

Streamline evaluation, monitoring, and optimization of an AI data flywheel with Weights & Biases.

Simulate, test, and optimize physical AI and robotic fleets at scale in industrial digital twins before real-world deployment.

The NV-EmbedCode model is a 7B Mistral-based embedding model optimized for code retrieval, supporting text, code, and hybrid queries.

Route LLM requests to the best model for the task at hand.

Generate exponentially large amounts of synthetic motion trajectories for robot manipulation from just a few human demonstrations.

Lightweight multilingual LLM powering AI applications in latency-bound, memory- and compute-constrained environments.

Enhance and modify high-quality compositions using real-time rendering and generative AI output without affecting a hero product asset.

Distilled version of Qwen 2.5 14B using reasoning data generated by DeepSeek R1 for enhanced performance.

Distilled version of Qwen 2.5 32B using reasoning data generated by DeepSeek R1 for enhanced performance.

Advanced small generative AI language model for edge applications.

Distilled version of Qwen 2.5 7B using reasoning data generated by DeepSeek R1 for enhanced performance.

Sovereign AI model fine-tuned on Traditional Mandarin and English data using the Llama-3 architecture.

Cutting-edge MoE-based LLM designed to excel in a wide array of generative AI tasks.

Sovereign AI model trained on the Japanese language that understands regional nuances.

Generate detailed, structured reports on any topic using LangGraph and Llama 3.3 70B NIM.

Efficient multimodal model excelling at multilingual tasks, image understanding, and fast responses.

Develop an AI-powered weather analysis and forecasting application that visualizes multi-layered geospatial data.

Automate voice AI agents with NVIDIA NIM microservices and Pipecat.

Document your GitHub repositories with AI agents using CrewAI and Llama 3.3 70B NIM.

Transform PDFs into AI podcasts for engaging on-the-go audio content.

Trace and evaluate AI Agents with Weights & Biases.

Grounding DINO is an open-vocabulary, zero-shot object detection model.

Topic control model to keep conversations focused on approved topics, avoiding inappropriate content.

FourCastNet predicts the global atmospheric dynamics of various weather and climate variables.

Advanced AI model that detects faces and identifies deepfake images.

Robust image classification model for detecting and managing AI-generated content.

Generates future frames of a physics-aware world state from just an image or short video prompt, for physical AI development.

Context-aware chart extraction that can detect 18 classes of basic chart elements, excluding plot elements.

Visual ChangeNet detects pixel-level changes between two images and outputs a semantic change segmentation mask.

EfficientDet-based object detection network to detect 100 specific retail objects from an input video.